reduce

- paddle.distributed. reduce ( tensor: Tensor, dst: int, op: _ReduceOp = 0, group: Group | None = None, sync_op: bool = True ) task [source]

-

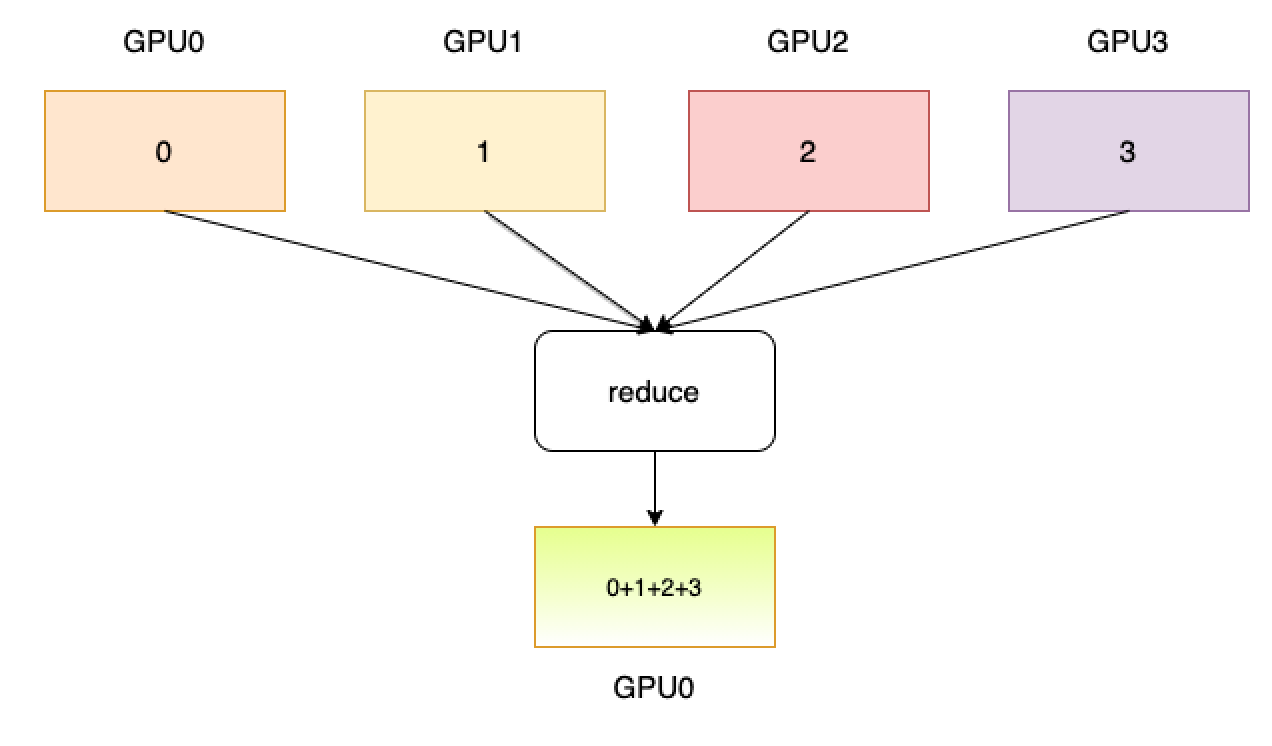

Reduce a tensor to the destination from all others. As shown below, one process is started with a GPU and the data of this process is represented by its group rank. The destination of the reduce operator is GPU0 and the process is sum. Through reduce operator, the GPU0 will owns the sum of all data from all GPUs.

- Parameters

-

tensor (Tensor) – The output Tensor for the destination and the input Tensor otherwise. Its data type should be float16, float32, float64, int32, int64, int8, uint8, bool or bfloat16.

dst (int) – The destination rank id.

op (ReduceOp.SUM|ReduceOp.MAX|ReduceOp.MIN|ReduceOp.PROD|ReduceOp.AVG, optional) – The operation used. Default value is ReduceOp.SUM.

group (Group|None, optional) – The group instance return by new_group or None for global default group.

sync_op (bool, optional) – Whether this op is a sync op. The default value is True.

- Returns

-

Return a task object.

Examples

>>> >>> import paddle >>> import paddle.distributed as dist >>> dist.init_parallel_env() >>> if dist.get_rank() == 0: ... data = paddle.to_tensor([[4, 5, 6], [4, 5, 6]]) >>> else: ... data = paddle.to_tensor([[1, 2, 3], [1, 2, 3]]) >>> dist.reduce(data, dst=0) >>> print(data) >>> # [[5, 7, 9], [5, 7, 9]] (2 GPUs, out for rank 0) >>> # [[1, 2, 3], [1, 2, 3]] (2 GPUs, out for rank 1)