alltoall

- paddle.distributed.alltoall(out_tensor_list: list[Tensor], in_tensor_list: list[Tensor], group: Group | None = None, sync_op: bool = True) -> task


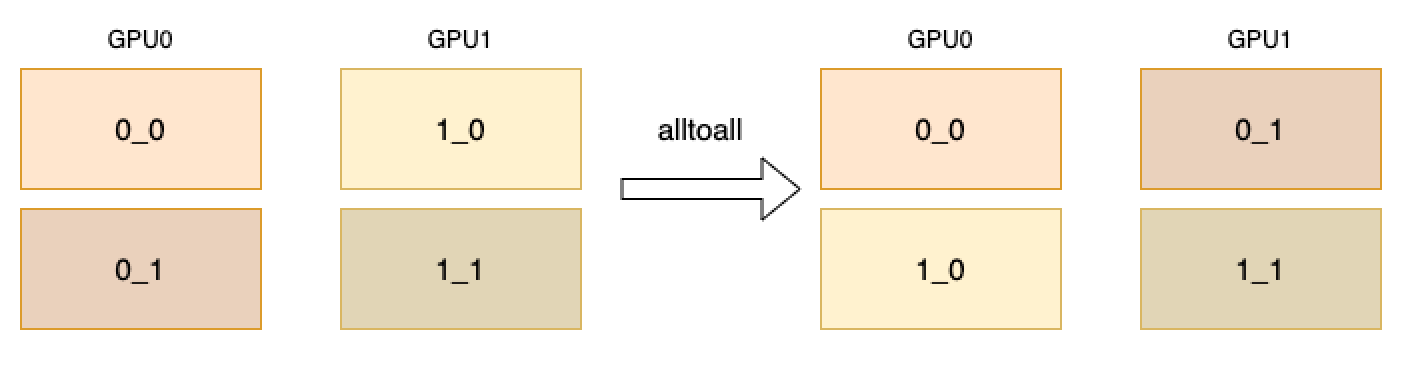

Scatters the tensors in in_tensor_list across all participants, one tensor per participant, and gathers the received tensors into out_tensor_list. As shown in the figure above, in_tensor_list on GPU0 holds 0_0 and 0_1, and in_tensor_list on GPU1 holds 1_0 and 1_1. With alltoall, GPU0 sends 0_0 to GPU0 and 0_1 to GPU1, while GPU1 sends 1_0 to GPU0 and 1_1 to GPU1. Afterwards, out_tensor_list on GPU0 holds 0_0 and 1_0, and out_tensor_list on GPU1 holds 0_1 and 1_1.
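The exchange pattern described above can be sketched in plain Python, without Paddle or any GPUs. This is only an illustration of the data movement, not how the operator is implemented: `simulate_alltoall` is a hypothetical helper, and the strings stand in for tensors. The assumption is that `in_lists[i][j]` is what rank `i` sends to rank `j`.

```python
def simulate_alltoall(in_lists):
    """Simulate the alltoall exchange on plain Python lists.

    in_lists[src][dst] is the item rank `src` sends to rank `dst`.
    Returns out_lists, where out_lists[dst][src] is that same item,
    i.e. what rank `dst` received from rank `src`.
    (Hypothetical helper for illustration; not part of Paddle.)
    """
    world_size = len(in_lists)
    for rank, items in enumerate(in_lists):
        if len(items) != world_size:
            raise ValueError(
                f"rank {rank} has {len(items)} items, expected {world_size}"
            )
    # Rank dst collects the dst-th item from every source rank, in rank order.
    return [
        [in_lists[src][dst] for src in range(world_size)]
        for dst in range(world_size)
    ]


# The two-GPU case from the description: strings stand in for tensors.
in_lists = [["0_0", "0_1"], ["1_0", "1_1"]]
out_lists = simulate_alltoall(in_lists)
print(out_lists[0])  # ['0_0', '1_0']
print(out_lists[1])  # ['0_1', '1_1']
```

Seen this way, the exchange is a transpose of the rank-by-rank grid of items, which is why each list needs exactly one entry per rank.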

- Parameters


out_tensor_list (List[Tensor]) – List of tensors that receive the gathered results, one per rank. Each tensor must have the same data type as the input tensors.

in_tensor_list (List[Tensor]) – List of tensors to scatter, one per rank. The data type of each tensor must be float16, float32, float64, int32, int64, int8, uint8, bool or bfloat16.

group (Group, optional) – The group instance returned by new_group, or None for the global default group. Default: None.

sync_op (bool, optional) – Whether the operation is synchronous. Default: True.

- Returns


Returns a task object.

Examples

    >>> import paddle
    >>> import paddle.distributed as dist

    >>> dist.init_parallel_env()

    >>> # all_to_all with equal split sizes
    >>> out_tensor_list = []  # type: ignore
    >>> if dist.get_rank() == 0:
    ...     data1 = paddle.to_tensor([[1, 2, 3], [4, 5, 6]])
    ...     data2 = paddle.to_tensor([[7, 8, 9], [10, 11, 12]])
    ... else:
    ...     data1 = paddle.to_tensor([[13, 14, 15], [16, 17, 18]])
    ...     data2 = paddle.to_tensor([[19, 20, 21], [22, 23, 24]])
    >>> dist.alltoall(out_tensor_list, [data1, data2])
    >>> print(out_tensor_list)
    >>> # [[[1, 2, 3], [4, 5, 6]], [[13, 14, 15], [16, 17, 18]]] (2 GPUs, out for rank 0)
    >>> # [[[7, 8, 9], [10, 11, 12]], [[19, 20, 21], [22, 23, 24]]] (2 GPUs, out for rank 1)

    >>> # all_to_all with unequal split sizes
    >>> if dist.get_rank() == 0:
    ...     data1 = paddle.to_tensor([[1, 2, 3], [4, 5, 6]])  # shape: (2, 3)
    ...     data2 = paddle.to_tensor([7])  # shape: (1, )
    ...     out_data1 = paddle.empty((2, 3), dtype=data1.dtype)
    ...     out_data2 = paddle.empty((3, 2), dtype=data1.dtype)
    ... else:
    ...     data1 = paddle.to_tensor([[8, 9], [10, 11], [12, 13]])  # shape: (3, 2)
    ...     data2 = paddle.to_tensor([[14, 15, 16, 17]])  # shape: (1, 4)
    ...     out_data1 = paddle.empty((1,), dtype=data1.dtype)
    ...     out_data2 = paddle.empty((1, 4), dtype=data1.dtype)
    >>> dist.alltoall([out_data1, out_data2], [data1, data2])
    >>> print([out_data1, out_data2])
    >>> # [[[1, 2, 3], [4, 5, 6]], [[8, 9], [10, 11], [12, 13]]] (2 GPUs, out for rank 0)
    >>> # [[7], [[14, 15, 16, 17]]] (2 GPUs, out for rank 1)