Tensor

class paddle.Tensor(*args, **kwargs)

Tensor is the basic data structure in PaddlePaddle. There are several ways to create a Tensor:

- Use existing data to create a Tensor; please refer to to_tensor.
- Create a Tensor with a specified shape; please refer to ones, zeros, full.
- Create a Tensor with the same shape and dtype as another Tensor; please refer to ones_like, zeros_like, full_like.

abs(name: str | None = None) → Tensor

Perform elementwise abs for input x.

\[out = |x|\]

- Parameters

x (Tensor) – The input Tensor with data type int32, int64, float16, float32, float64, complex64 and complex128.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

Tensor. A Tensor with the same data type and shape as \(x\).

Examples

>>> import paddle
>>> x = paddle.to_tensor([-0.4, -0.2, 0.1, 0.3])
>>> out = paddle.abs(x)
>>> print(out)
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[0.40000001, 0.20000000, 0.10000000, 0.30000001])

abs_(name=None)

Inplace version of the abs API; the output Tensor is written in place to the input x. Please refer to abs.

acos(name: str | None = None) → Tensor

Acos Activation Operator.

\[out = cos^{-1}(x)\]

- Parameters

x (Tensor) – Input of Acos operator, an N-D Tensor, with data type float32, float64, float16, bfloat16, uint8, int8, int16, int32, int64, complex64 or complex128.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

Tensor. Output of Acos operator, a Tensor with the same shape as the input (integer types are autocasted into float32).

Examples

>>> import paddle
>>> x = paddle.to_tensor([-0.4, -0.2, 0.1, 0.3])
>>> out = paddle.acos(x)
>>> print(out)
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[1.98231316, 1.77215421, 1.47062886, 1.26610363])

acos_(name=None)

Inplace version of the acos API; the output Tensor is written in place to the input x. Please refer to acos.

acosh(name: str | None = None) → Tensor

Acosh Activation Operator.

\[out = acosh(x)\]

- Parameters

x (Tensor) – Input of Acosh operator, an N-D Tensor, with data type float32, float64, float16, bfloat16, uint8, int8, int16, int32, int64, complex64 or complex128.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

Tensor. Output of Acosh operator, a Tensor with the same shape as the input (integer types are autocasted into float32).

Examples

>>> import paddle
>>> x = paddle.to_tensor([1., 3., 4., 5.])
>>> out = paddle.acosh(x)
>>> print(out)
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[0.        , 1.76274717, 2.06343699, 2.29243159])
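The acosh values can be cross-checked with the standard identity \(acosh(x) = \ln(x + \sqrt{x^2 - 1})\) for \(x \ge 1\). The following is a plain-Python sketch using only the standard library; it does not call Paddle, and `acosh_via_log` is a hypothetical helper name introduced for illustration.

```python
import math

def acosh_via_log(x: float) -> float:
    """Inverse hyperbolic cosine via the logarithmic identity (real-valued for x >= 1)."""
    if x < 1.0:
        raise ValueError("acosh is only real-valued for x >= 1")
    return math.log(x + math.sqrt(x * x - 1.0))

inputs = [1.0, 3.0, 4.0, 5.0]  # same inputs as the acosh example
values = [acosh_via_log(v) for v in inputs]
for v, out in zip(inputs, values):
    # The identity and math.acosh should agree to floating-point precision.
    print(v, round(out, 6), round(math.acosh(v), 6))
```

The printed values are computed in float64, so they can differ from a float32 Tensor output in the last digits.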

acosh_(name=None)

Inplace version of the acosh API; the output Tensor is written in place to the input x. Please refer to acosh.

add(y: Tensor, name: str | None = None, *, alpha: Number = 1, out: Tensor | None = None) → Tensor

Elementwise Add Operator. Add two tensors element-wise. The equation is:

\[Out=X+Y\]

$X$ is a tensor of any dimension. $Y$ is a tensor whose number of dimensions must be less than or equal to that of $X$.

This operator is used in the following cases:

- The shape of $Y$ is the same as that of $X$.
- The shape of $Y$ is a continuous subsequence of the shape of $X$.

For example:

shape(X) = (2, 3, 4, 5), shape(Y) = (,)
shape(X) = (2, 3, 4, 5), shape(Y) = (5,)
shape(X) = (2, 3, 4, 5), shape(Y) = (4, 5), with axis=-1(default) or axis=2
shape(X) = (2, 3, 4, 5), shape(Y) = (3, 4), with axis=1
shape(X) = (2, 3, 4, 5), shape(Y) = (2), with axis=0
shape(X) = (2, 3, 4, 5), shape(Y) = (2, 1), with axis=0

Note

Alias Support: The parameter name input can be used as an alias for x, and other can be used as an alias for y. For example, add(input=tensor_x, other=tensor_y) is equivalent to add(x=tensor_x, y=tensor_y).

- Parameters

x (Tensor) – Tensor of any dimensions. Its dtype should be bool, bfloat16, float16, float32, float64, int8, int16, int32, int64, uint8, complex64, complex128. alias: input.

y (Tensor) – Tensor of any dimensions. Its dtype should be bool, bfloat16, float16, float32, float64, int8, int16, int32, int64, uint8, complex64, complex128. alias: other.

alpha (Number, optional) – Scaling factor for Y. Default: 1.

out (Tensor, optional) – The output tensor. Default: None.

name (str|None, optional) – For details, please refer to api_guide_Name. Generally, no setting is required. Default: None.

- Returns

N-D Tensor. A location into which the result is stored. Its dimension equals that of x.

Examples

>>> import paddle
>>> x = paddle.to_tensor([2, 3, 4], 'float64')
>>> y = paddle.to_tensor([1, 5, 2], 'float64')
>>> z = paddle.add(x, y)
>>> print(z)
Tensor(shape=[3], dtype=float64, place=Place(cpu), stop_gradient=True,
[3., 8., 6.])

add_(y: Tensor, name: str | None = None, *, alpha: Number = 1) → Tensor

Inplace version of the add API; the output Tensor is written in place to the input x. Please refer to add.

add_n(name: str | None = None) → Tensor

Sum one or more input Tensors.

For example:

Case 1:

    Input:
        input.shape = [2, 3]
        input = [[1, 2, 3],
                 [4, 5, 6]]

    Output:
        output.shape = [2, 3]
        output = [[1, 2, 3],
                  [4, 5, 6]]

Case 2:

    Input:
        First input:
            input1.shape = [2, 3]
            input1 = [[1, 2, 3],
                      [4, 5, 6]]

        The second input:
            input2.shape = [2, 3]
            input2 = [[7, 8, 9],
                      [10, 11, 12]]

    Output:
        output.shape = [2, 3]
        output = [[8, 10, 12],
                  [14, 16, 18]]

- Parameters

inputs (Tensor|list[Tensor]|tuple[Tensor]) – A Tensor or a list/tuple of Tensors. The shape and data type of the list/tuple elements should be consistent. Input can be multi-dimensional Tensor, and data types can be: bfloat16, float16, float32, float64, int32, int64, complex64, complex128.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

Tensor, the sum of \(inputs\); its shape and data type are consistent with \(inputs\).

Examples

>>> import paddle
>>> input0 = paddle.to_tensor([[1, 2, 3], [4, 5, 6]], dtype='float32')
>>> input1 = paddle.to_tensor([[7, 8, 9], [10, 11, 12]], dtype='float32')
>>> output = paddle.add_n([input0, input1])
>>> output
Tensor(shape=[2, 3], dtype=float32, place=Place(cpu), stop_gradient=True,
[[8. , 10., 12.],
 [14., 16., 18.]])
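The two cases described for add_n can be reproduced with nested lists. This is a minimal plain-Python sketch of an element-wise sum over equally shaped 2-D inputs; `add_n_2d` is a hypothetical helper, not part of Paddle, and it only mirrors the arithmetic.

```python
def add_n_2d(inputs):
    """Element-wise sum of equally shaped 2-D nested lists (stand-ins for tensors)."""
    first = inputs[0]
    rows, cols = len(first), len(first[0])
    for t in inputs:
        # All inputs must share one shape, as the parameter description requires.
        if len(t) != rows or any(len(r) != cols for r in t):
            raise ValueError("all inputs must have the same shape")
    return [[sum(t[i][j] for t in inputs) for j in range(cols)] for i in range(rows)]

input1 = [[1, 2, 3], [4, 5, 6]]
input2 = [[7, 8, 9], [10, 11, 12]]

case1 = add_n_2d([input1])          # Case 1: a single input is returned unchanged
case2 = add_n_2d([input1, input2])  # Case 2: two inputs are summed element-wise
print(case1)
print(case2)
```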

addmm(x: Tensor, y: Tensor, beta: float = 1.0, alpha: float = 1.0, name: str | None = None) → Tensor

Perform matrix multiplication for input $x$ and $y$. $input$ is added to the final result. The equation is:

\[Out = alpha * x * y + beta * input\]

$Input$, $x$ and $y$ can carry the LoD (Level of Details) information, or not. But the output only shares the LoD information with input $input$.

- Parameters

input (Tensor) – The input Tensor to be added to the final result.

x (Tensor) – The first input Tensor for matrix multiplication.

y (Tensor) – The second input Tensor for matrix multiplication.

beta (float, optional) – Coefficient of $input$, default is 1.

alpha (float, optional) – Coefficient of $x*y$, default is 1.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

The output Tensor of addmm.

- Return type

Tensor

Examples

>>> import paddle
>>> x = paddle.ones([2, 2])
>>> y = paddle.ones([2, 2])
>>> input = paddle.ones([2, 2])
>>> out = paddle.addmm(input=input, x=x, y=y, beta=0.5, alpha=5.0)
>>> print(out)
Tensor(shape=[2, 2], dtype=float32, place=Place(cpu), stop_gradient=True,
[[10.50000000, 10.50000000],
 [10.50000000, 10.50000000]])
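The value 10.5 in the addmm example follows directly from the equation: with all-ones 2x2 matrices, x * y has entries 2, so alpha * 2 + beta * 1 = 5.0 * 2 + 0.5 = 10.5. The plain-Python sketch below spells this out; `matmul` and `addmm_sketch` are hypothetical helpers, and the sketch assumes input already has the same shape as the product (no broadcasting).

```python
def matmul(a, b):
    """Naive matrix product of two nested-list matrices."""
    inner = len(b)
    return [[sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
            for i in range(len(a))]

def addmm_sketch(inp, x, y, beta=1.0, alpha=1.0):
    """Compute alpha * (x @ y) + beta * inp, element by element."""
    prod = matmul(x, y)
    return [[alpha * prod[i][j] + beta * inp[i][j] for j in range(len(prod[0]))]
            for i in range(len(prod))]

ones = [[1.0, 1.0], [1.0, 1.0]]
out = addmm_sketch(ones, ones, ones, beta=0.5, alpha=5.0)
print(out)
```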

addmm_(x: Tensor, y: Tensor, beta: float = 1.0, alpha: float = 1.0, name: str | None = None) → Tensor

Inplace version of the addmm API; the output Tensor is written in place to the input Tensor input. Please refer to addmm.

all(axis: int | Sequence[int] | None = None, keepdim: bool = False, name: str | None = None, *, out: Tensor | None = None) → Tensor

Computes the logical and of tensor elements over the given dimension.

- Parameters

x (Tensor) – An N-D Tensor, the input data type should be ‘bool’, ‘float32’, ‘float64’, ‘int32’, ‘int64’, ‘complex64’, ‘complex128’.

axis (int|list|tuple|None, optional) – The dimensions along which the logical and is computed. If None, compute the logical and over all elements of x and return a Tensor with a single element; otherwise it must be in the range \([-rank(x), rank(x))\). If \(axis[i] < 0\), the dimension to reduce is \(rank + axis[i]\).

keepdim (bool, optional) – Whether to reserve the reduced dimension in the output Tensor. The result Tensor will have one fewer dimension than x unless keepdim is true. Default is False.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Keyword Arguments

out (Tensor, optional) – The output tensor.

- Returns

The result of the logical and on the specified axis of the input Tensor x; its data type is bool.

- Return type

Tensor

Examples

>>> # type: ignore
>>> import paddle
>>> # x is a bool Tensor with following elements:
>>> #    [[True, False]
>>> #     [True, True]]
>>> x = paddle.to_tensor([[1, 0], [1, 1]], dtype='int32')
>>> x
Tensor(shape=[2, 2], dtype=int32, place=Place(cpu), stop_gradient=True,
[[1, 0],
 [1, 1]])
>>> x = paddle.cast(x, 'bool')
>>> # out1 should be False
>>> out1 = paddle.all(x)
>>> out1
Tensor(shape=[], dtype=bool, place=Place(cpu), stop_gradient=True,
False)
>>> # out2 should be [True, False]
>>> out2 = paddle.all(x, axis=0)
>>> out2
Tensor(shape=[2], dtype=bool, place=Place(cpu), stop_gradient=True,
[True , False])
>>> # keepdim=False, out3 should be [False, True], out.shape should be (2,)
>>> out3 = paddle.all(x, axis=-1)
>>> out3
Tensor(shape=[2], dtype=bool, place=Place(cpu), stop_gradient=True,
[False, True ])
>>> # keepdim=True, out4 should be [[False], [True]], out.shape should be (2, 1)
>>> out4 = paddle.all(x, axis=1, keepdim=True)
>>> out4
Tensor(shape=[2, 1], dtype=bool, place=Place(cpu), stop_gradient=True,
[[False],
 [True ]])
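How axis and keepdim shape the result of a logical-and reduction can be traced with nested lists. The sketch below is plain Python restricted to 2-D input; `all_2d` is a hypothetical helper that mimics the documented semantics (negative axis counts from the end, keepdim keeps the reduced axis with size 1) and is not Paddle's implementation.

```python
def all_2d(x, axis=None, keepdim=False):
    """Logical AND reduction over a 2-D nested list of booleans."""
    rows, cols = len(x), len(x[0])
    if axis is None:
        # Reduce over every element; keepdim keeps both axes with size 1.
        result = all(v for row in x for v in row)
        return [[result]] if keepdim else result
    if not -2 <= axis < 2:
        raise ValueError("axis must be in [-2, 2) for a 2-D input")
    axis %= 2  # a negative axis maps to rank + axis
    if axis == 0:
        reduced = [all(x[i][j] for i in range(rows)) for j in range(cols)]
        return [reduced] if keepdim else reduced
    reduced = [all(row) for row in x]
    return [[v] for v in reduced] if keepdim else reduced

x = [[True, False], [True, True]]
out1 = all_2d(x)                        # scalar result
out2 = all_2d(x, axis=0)                # reduce down the columns
out3 = all_2d(x, axis=-1)               # reduce along each row
out4 = all_2d(x, axis=1, keepdim=True)  # same values, shape (2, 1)
print(out1, out2, out3, out4)
```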

allclose(y: Tensor, rtol: float = 1e-05, atol: float = 1e-08, equal_nan: bool = False, name: str | None = None) → Tensor

Check if all \(x\) and \(y\) satisfy the condition:

\[\left| x - y \right| \leq atol + rtol \times \left| y \right|\]

elementwise, for all elements of \(x\) and \(y\). This is analogous to numpy.allclose, namely that it returns True if two tensors are elementwise equal within a tolerance.

- Parameters

x (Tensor) – The input tensor; its data type should be float16, float32, float64.

y (Tensor) – The input tensor; its data type should be float16, float32, float64.

rtol (float, optional) – The relative tolerance. Default: \(1e-5\).

atol (float, optional) – The absolute tolerance. Default: \(1e-8\).

equal_nan (bool, optional) – If True, NaNs at the same position in x and y are treated as equal. Default: False.

name (str|None, optional) – Name for the operation. For more information, please refer to api_guide_Name. Default: None.

- Returns

The output tensor; its data type is bool.

- Return type

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([10000., 1e-07])
>>> y = paddle.to_tensor([10000.1, 1e-08])
>>> result1 = paddle.allclose(x, y, rtol=1e-05, atol=1e-08, equal_nan=False, name="ignore_nan")
>>> print(result1)
Tensor(shape=[], dtype=bool, place=Place(cpu), stop_gradient=True,
False)
>>> result2 = paddle.allclose(x, y, rtol=1e-05, atol=1e-08, equal_nan=True, name="equal_nan")
>>> print(result2)
Tensor(shape=[], dtype=bool, place=Place(cpu), stop_gradient=True,
False)
>>> x = paddle.to_tensor([1.0, float('nan')])
>>> y = paddle.to_tensor([1.0, float('nan')])
>>> result1 = paddle.allclose(x, y, rtol=1e-05, atol=1e-08, equal_nan=False, name="ignore_nan")
>>> print(result1)
Tensor(shape=[], dtype=bool, place=Place(cpu), stop_gradient=True,
False)
>>> result2 = paddle.allclose(x, y, rtol=1e-05, atol=1e-08, equal_nan=True, name="equal_nan")
>>> print(result2)
Tensor(shape=[], dtype=bool, place=Place(cpu), stop_gradient=True,
True)
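The tolerance condition can be checked by hand on the example inputs. The sketch below is plain Python over lists of floats; `allclose_sketch` is a hypothetical helper that applies the stated inequality pair by pair, and its NaN handling is an assumption modeled on the example outputs, not Paddle's implementation.

```python
import math

def allclose_sketch(x, y, rtol=1e-05, atol=1e-08, equal_nan=False):
    """Return True if every pair satisfies |x - y| <= atol + rtol * |y|."""
    for a, b in zip(x, y):
        if math.isnan(a) or math.isnan(b):
            # NaN never satisfies the inequality; with equal_nan a NaN pair counts as a match.
            if equal_nan and math.isnan(a) and math.isnan(b):
                continue
            return False
        if abs(a - b) > atol + rtol * abs(b):
            return False
    return True

# First pair passes (0.1 <= ~0.100001), second fails (9e-08 > ~1e-08).
r1 = allclose_sketch([10000.0, 1e-07], [10000.1, 1e-08])
r2 = allclose_sketch([1.0, float("nan")], [1.0, float("nan")])
r3 = allclose_sketch([1.0, float("nan")], [1.0, float("nan")], equal_nan=True)
print(r1, r2, r3)
```

Note that the tolerance scales with \(|y|\) only, so the check is not symmetric in x and y.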

amax(axis: int | Sequence[int] | None = None, keepdim: bool = False, name: str | None = None, *, out: Tensor | None = None) → Tensor

Computes the maximum of tensor elements over the given axis.

Note

The difference between max and amax is: If there are multiple maximum elements, amax evenly distributes gradient between these equal values, while max propagates gradient to all of them.

- Parameters

x (Tensor) – A tensor, the data type is float32, float64, int32, int64, the dimension is no more than 4.

axis (int|list|tuple|None, optional) – The axis along which the maximum is computed. If None, compute the maximum over all elements of x and return a Tensor with a single element; otherwise it must be in the range \([-x.ndim, x.ndim)\). If \(axis[i] < 0\), the axis to reduce is \(x.ndim + axis[i]\).

keepdim (bool, optional) – Whether to reserve the reduced dimension in the output Tensor. The result tensor will have one fewer dimension than x unless keepdim is true. Default is False.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Keyword Arguments

out (Tensor|None, optional) – Output tensor. If provided in dynamic graph, the result will be written to this tensor and also returned. The returned tensor and out share memory and autograd meta. Default: None.

- Returns

Tensor, results of maximum on the specified axis of input tensor; its data type is the same as x.

Examples

>>> # type: ignore
>>> import paddle
>>> # data_x is a Tensor with shape [2, 4] with multiple maximum elements
>>> # the axis is a int element
>>> x = paddle.to_tensor([[0.1, 0.9, 0.9, 0.9],
...                       [0.9, 0.9, 0.6, 0.7]],
...                      dtype='float64', stop_gradient=False)
>>> # There are 5 maximum elements:
>>> # 1) amax evenly distributes gradient between these equal values,
>>> #    thus the corresponding gradients are 1/5=0.2;
>>> # 2) while max propagates gradient to all of them,
>>> #    thus the corresponding gradient are 1.
>>> result1 = paddle.amax(x)
>>> result1.backward()
>>> result1
Tensor(shape=[], dtype=float64, place=Place(cpu), stop_gradient=False,
0.90000000)
>>> x.grad
Tensor(shape=[2, 4], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.        , 0.20000000, 0.20000000, 0.20000000],
 [0.20000000, 0.20000000, 0.        , 0.        ]])
>>> x.clear_grad()
>>> result1_max = paddle.max(x)
>>> result1_max.backward()
>>> result1_max
Tensor(shape=[], dtype=float64, place=Place(cpu), stop_gradient=False,
0.90000000)
>>> x.clear_grad()
>>> result2 = paddle.amax(x, axis=0)
>>> result2.backward()
>>> result2
Tensor(shape=[4], dtype=float64, place=Place(cpu), stop_gradient=False,
[0.90000000, 0.90000000, 0.90000000, 0.90000000])
>>> x.grad
Tensor(shape=[2, 4], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.        , 0.50000000, 1.        , 1.        ],
 [1.        , 0.50000000, 0.        , 0.        ]])
>>> x.clear_grad()
>>> result3 = paddle.amax(x, axis=-1)
>>> result3.backward()
>>> result3
Tensor(shape=[2], dtype=float64, place=Place(cpu), stop_gradient=False,
[0.90000000, 0.90000000])
>>> x.grad
Tensor(shape=[2, 4], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.        , 0.33333333, 0.33333333, 0.33333333],
 [0.50000000, 0.50000000, 0.        , 0.        ]])
>>> x.clear_grad()
>>> result4 = paddle.amax(x, axis=1, keepdim=True)
>>> result4.backward()
>>> result4
Tensor(shape=[2, 1], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.90000000],
 [0.90000000]])
>>> x.grad
Tensor(shape=[2, 4], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.        , 0.33333333, 0.33333333, 0.33333333],
 [0.50000000, 0.50000000, 0.        , 0.        ]])
>>> # data_y is a Tensor with shape [2, 2, 2]
>>> # the axis is list
>>> y = paddle.to_tensor([[[0.1, 0.9], [0.9, 0.9]],
...                       [[0.9, 0.9], [0.6, 0.7]]],
...                      dtype='float64', stop_gradient=False)
>>> result5 = paddle.amax(y, axis=[1, 2])
>>> result5.backward()
>>> result5
Tensor(shape=[2], dtype=float64, place=Place(cpu), stop_gradient=False,
[0.90000000, 0.90000000])
>>> y.grad
Tensor(shape=[2, 2, 2], dtype=float64, place=Place(cpu), stop_gradient=False,
[[[0.        , 0.33333333],
  [0.33333333, 0.33333333]],
 [[0.50000000, 0.50000000],
  [0.        , 0.        ]]])
>>> y.clear_grad()
>>> result6 = paddle.amax(y, axis=[0, 1])
>>> result6.backward()
>>> result6
Tensor(shape=[2], dtype=float64, place=Place(cpu), stop_gradient=False,
[0.90000000, 0.90000000])
>>> y.grad
Tensor(shape=[2, 2, 2], dtype=float64, place=Place(cpu), stop_gradient=False,
[[[0.        , 0.33333333],
  [0.50000000, 0.33333333]],
 [[0.50000000, 0.33333333],
  [0.        , 0.        ]]])
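The tie-splitting gradients shown for amax with axis=-1 can be reproduced by hand. The sketch below is plain Python; `amax_grad_rows` is a hypothetical helper, and it assumes an upstream gradient of 1 for each row's maximum, which is what the x.grad values in the example suggest. It is not Paddle's autograd.

```python
def amax_grad_rows(x):
    """Gradient of the row-wise maximum w.r.t. x, split evenly among tied maxima."""
    grad = []
    for row in x:
        m = max(row)
        ties = sum(1 for v in row if v == m)
        # Each maximal element receives an equal 1/ties share; all others get 0.
        grad.append([1.0 / ties if v == m else 0.0 for v in row])
    return grad

x = [[0.1, 0.9, 0.9, 0.9],
     [0.9, 0.9, 0.6, 0.7]]
grad = amax_grad_rows(x)
for row in grad:
    print([round(g, 8) for g in row])
```

Row 0 has three tied maxima, so each gets 1/3; row 1 has two, so each gets 1/2.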

amin(axis: int | Sequence[int] | None = None, keepdim: bool = False, name: str | None = None, *, out: Tensor | None = None) → Tensor

Computes the minimum of tensor elements over the given axis.

Note

The difference between min and amin is: If there are multiple minimum elements, amin evenly distributes gradient between these equal values, while min propagates gradient to all of them.

- Parameters

x (Tensor) – A tensor, the data type is float32, float64, int32, int64, the dimension is no more than 4.

axis (int|list|tuple|None, optional) – The axis along which the minimum is computed. If None, compute the minimum over all elements of x and return a Tensor with a single element; otherwise it must be in the range \([-x.ndim, x.ndim)\). If \(axis[i] < 0\), the axis to reduce is \(x.ndim + axis[i]\).

keepdim (bool, optional) – Whether to reserve the reduced dimension in the output Tensor. The result tensor will have one fewer dimension than x unless keepdim is true. Default is False.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Keyword Arguments

out (Tensor|None, optional) – Output tensor. If provided in dynamic graph, the result will be written to this tensor and also returned. The returned tensor and out share memory and autograd meta. Default: None.

- Returns

Tensor, results of minimum on the specified axis of input tensor; its data type is the same as that of the input Tensor.

Examples

>>> # type: ignore
>>> import paddle
>>> # data_x is a Tensor with shape [2, 4] with multiple minimum elements
>>> # the axis is a int element
>>> x = paddle.to_tensor([[0.2, 0.1, 0.1, 0.1],
...                       [0.1, 0.1, 0.6, 0.7]],
...                      dtype='float64', stop_gradient=False)
>>> # There are 5 minimum elements:
>>> # 1) amin evenly distributes gradient between these equal values,
>>> #    thus the corresponding gradients are 1/5=0.2;
>>> # 2) while min propagates gradient to all of them,
>>> #    thus the corresponding gradient are 1.
>>> result1 = paddle.amin(x)
>>> result1.backward()
>>> result1
Tensor(shape=[], dtype=float64, place=Place(cpu), stop_gradient=False,
0.10000000)
>>> x.grad
Tensor(shape=[2, 4], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.        , 0.20000000, 0.20000000, 0.20000000],
 [0.20000000, 0.20000000, 0.        , 0.        ]])
>>> x.clear_grad()
>>> result1_min = paddle.min(x)
>>> result1_min.backward()
>>> result1_min
Tensor(shape=[], dtype=float64, place=Place(cpu), stop_gradient=False,
0.10000000)
>>> x.clear_grad()
>>> result2 = paddle.amin(x, axis=0)
>>> result2.backward()
>>> result2
Tensor(shape=[4], dtype=float64, place=Place(cpu), stop_gradient=False,
[0.10000000, 0.10000000, 0.10000000, 0.10000000])
>>> x.grad
Tensor(shape=[2, 4], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.        , 0.50000000, 1.        , 1.        ],
 [1.        , 0.50000000, 0.        , 0.        ]])
>>> x.clear_grad()
>>> result3 = paddle.amin(x, axis=-1)
>>> result3.backward()
>>> result3
Tensor(shape=[2], dtype=float64, place=Place(cpu), stop_gradient=False,
[0.10000000, 0.10000000])
>>> x.grad
Tensor(shape=[2, 4], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.        , 0.33333333, 0.33333333, 0.33333333],
 [0.50000000, 0.50000000, 0.        , 0.        ]])
>>> x.clear_grad()
>>> result4 = paddle.amin(x, axis=1, keepdim=True)
>>> result4.backward()
>>> result4
Tensor(shape=[2, 1], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.10000000],
 [0.10000000]])
>>> x.grad
Tensor(shape=[2, 4], dtype=float64, place=Place(cpu), stop_gradient=False,
[[0.        , 0.33333333, 0.33333333, 0.33333333],
 [0.50000000, 0.50000000, 0.        , 0.        ]])
>>> # data_y is a Tensor with shape [2, 2, 2]
>>> # the axis is list
>>> y = paddle.to_tensor([[[0.2, 0.1], [0.1, 0.1]],
...                       [[0.1, 0.1], [0.6, 0.7]]],
...                      dtype='float64', stop_gradient=False)
>>> result5 = paddle.amin(y, axis=[1, 2])
>>> result5.backward()
>>> result5
Tensor(shape=[2], dtype=float64, place=Place(cpu), stop_gradient=False,
[0.10000000, 0.10000000])
>>> y.grad
Tensor(shape=[2, 2, 2], dtype=float64, place=Place(cpu), stop_gradient=False,
[[[0.        , 0.33333333],
  [0.33333333, 0.33333333]],
 [[0.50000000, 0.50000000],
  [0.        , 0.        ]]])
>>> y.clear_grad()
>>> result6 = paddle.amin(y, axis=[0, 1])
>>> result6.backward()
>>> result6
Tensor(shape=[2], dtype=float64, place=Place(cpu), stop_gradient=False,
[0.10000000, 0.10000000])
>>> y.grad
Tensor(shape=[2, 2, 2], dtype=float64, place=Place(cpu), stop_gradient=False,
[[[0.        , 0.33333333],
  [0.50000000, 0.33333333]],
 [[0.50000000, 0.33333333],
  [0.        , 0.        ]]])

angle(name: str | None = None) → Tensor

Element-wise angle of complex numbers. For non-negative real numbers, the angle is 0 while for negative real numbers, the angle is \(\pi\), and NaNs are propagated.

- Equation:

\[angle(x)=arctan2(x.imag, x.real)\]

- Parameters

x (Tensor) – An N-D Tensor, the data type is complex64, complex128, or float32, float64.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

An N-D Tensor of real data type with the same precision as that of x’s data type.

- Return type

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([-2, -1, 0, 1]).unsqueeze(-1).astype('float32')
>>> y = paddle.to_tensor([-2, -1, 0, 1]).astype('float32')
>>> z = x + 1j * y
>>> z
Tensor(shape=[4, 4], dtype=complex64, place=Place(cpu), stop_gradient=True,
[[(-2-2j), (-2-1j), (-2+0j), (-2+1j)],
 [(-1-2j), (-1-1j), (-1+0j), (-1+1j)],
 [-2j    , -1j    ,  0j    ,  1j    ],
 [ (1-2j),  (1-1j),  (1+0j),  (1+1j)]])
>>> theta = paddle.angle(z)
>>> theta
Tensor(shape=[4, 4], dtype=float32, place=Place(cpu), stop_gradient=True,
[[-2.35619450, -2.67794514,  3.14159274,  2.67794514],
 [-2.03444386, -2.35619450,  3.14159274,  2.35619450],
 [-1.57079637, -1.57079637,  0.        ,  1.57079637],
 [-1.10714877, -0.78539819,  0.        ,  0.78539819]])
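The angle equation can be evaluated for single complex numbers with the standard library. This is a minimal plain-Python sketch; `angle_sketch` is a hypothetical helper that applies atan2(imag, real) directly, and `cmath.phase` is used only as an independent cross-check.

```python
import cmath
import math

def angle_sketch(z: complex) -> float:
    """Angle of a complex number as atan2(imag, real), in radians."""
    return math.atan2(z.imag, z.real)

samples = [complex(-2, -2), complex(-2, 0), complex(0, 1), complex(1, 0), complex(1, 1)]
angles = [angle_sketch(z) for z in samples]
for z, a in zip(samples, angles):
    # cmath.phase is the standard-library equivalent and should agree.
    print(z, round(a, 6), round(cmath.phase(z), 6))
```

A negative real number such as -2+0j yields \(\pi\) and a non-negative real number yields 0, in line with the description of angle.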

any(axis: int | Sequence[int] | None = None, keepdim: bool = False, name: str | None = None, *, out: Tensor | None = None) → Tensor

Computes the logical or of tensor elements over the given dimension, and returns the result.

Note

Alias Support: The parameter name input can be used as an alias for x, and the parameter name dim can be used as an alias for axis. For example, any(input=tensor_x, dim=1) is equivalent to any(x=tensor_x, axis=1).

- Parameters

x (Tensor) – An N-D Tensor, the input data type should be ‘bool’, ‘float32’, ‘float64’, ‘int32’, ‘int64’, ‘complex64’, ‘complex128’. alias: input.

axis (int|list|tuple|None, optional) – The dimensions along which the logical or is computed. If None, compute the logical or over all elements of x and return a Tensor with a single element; otherwise it must be in the range \([-rank(x), rank(x))\). If \(axis[i] < 0\), the dimension to reduce is \(rank + axis[i]\). alias: dim.

keepdim (bool, optional) – Whether to reserve the reduced dimension in the output Tensor. The result Tensor will have one fewer dimension than x unless keepdim is true. Default is False.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

out (Tensor|None, optional) – The output tensor. Default: None.

- Returns

The result of the logical or on the specified axis of the input Tensor x; its data type is bool.

- Return type

Tensor

Examples

>>> import paddle
>>> # type: ignore
>>> x = paddle.to_tensor([[1, 0], [1, 1]], dtype='int32')
>>> x = paddle.assign(x)
>>> x
Tensor(shape=[2, 2], dtype=int32, place=Place(cpu), stop_gradient=True,
[[1, 0],
 [1, 1]])
>>> x = paddle.cast(x, 'bool')
>>> # x is a bool Tensor with following elements:
>>> #    [[True, False]
>>> #     [True, True]]
>>> # out1 should be True
>>> out1 = paddle.any(x)
>>> out1
Tensor(shape=[], dtype=bool, place=Place(cpu), stop_gradient=True,
True)
>>> # out2 should be [True, True]
>>> out2 = paddle.any(x, axis=0)
>>> out2
Tensor(shape=[2], dtype=bool, place=Place(cpu), stop_gradient=True,
[True, True])
>>> # keepdim=False, out3 should be [True, True], out.shape should be (2,)
>>> out3 = paddle.any(x, axis=-1)
>>> out3
Tensor(shape=[2], dtype=bool, place=Place(cpu), stop_gradient=True,
[True, True])
>>> # keepdim=True, result should be [[True], [True]], out.shape should be (2,1)
>>> out4 = paddle.any(x, axis=1, keepdim=True)
>>> out4
Tensor(shape=[2, 1], dtype=bool, place=Place(cpu), stop_gradient=True,
[[True],
 [True]])

apply(func: Callable[[Tensor], Tensor]) → Tensor

Apply the python function to the tensor.

- Returns

Tensor. The result of applying func to the tensor.

Examples

>>> import paddle
>>> x = paddle.to_tensor([[0.3, 0.5, 0.1],
...                       [0.9, 0.9, 0.7],
...                       [0.4, 0.8, 0.2]]).to("cpu", "float64")
>>> f = lambda x: 3*x+2
>>> y = x.apply(f)
>>> print(y)
Tensor(shape=[3, 3], dtype=float64, place=Place(cpu), stop_gradient=True,
[[2.90000004, 3.50000000, 2.30000000],
 [4.69999993, 4.69999993, 4.09999996],
 [3.20000002, 4.40000004, 2.60000001]])
>>> x = paddle.to_tensor([[0.3, 0.5, 0.1],
...                       [0.9, 0.9, 0.7],
...                       [0.4, 0.8, 0.2]]).to("cpu", "float16")
>>> y = x.apply(f)
>>> x = paddle.to_tensor([[0.3, 0.5, 0.1],
...                       [0.9, 0.9, 0.7],
...                       [0.4, 0.8, 0.2]]).to("cpu", "bfloat16")
>>> y = x.apply(f)
>>> if paddle.is_compiled_with_cuda():
...     x = paddle.to_tensor([[0.3, 0.5, 0.1],
...                           [0.9, 0.9, 0.7],
...                           [0.4, 0.8, 0.2]]).to("gpu", "float32")
...     y = x.apply(f)

apply_(func: Callable[[Tensor], Tensor]) → Tensor

Inplace apply the python function to the tensor.

- Returns

None

Examples

>>> import paddle
>>> x = paddle.to_tensor([[0.3, 0.5, 0.1],
...                       [0.9, 0.9, 0.7],
...                       [0.4, 0.8, 0.2]]).to("cpu", "float64")
>>> f = lambda x: 3*x+2
>>> x.apply_(f)
>>> print(x)
Tensor(shape=[3, 3], dtype=float64, place=Place(cpu), stop_gradient=True,
[[2.90000004, 3.50000000, 2.30000000],
 [4.69999993, 4.69999993, 4.09999996],
 [3.20000002, 4.40000004, 2.60000001]])
>>> x = paddle.to_tensor([[0.3, 0.5, 0.1],
...                       [0.9, 0.9, 0.7],
...                       [0.4, 0.8, 0.2]]).to("cpu", "float16")
>>> x.apply_(f)
>>> x = paddle.to_tensor([[0.3, 0.5, 0.1],
...                       [0.9, 0.9, 0.7],
...                       [0.4, 0.8, 0.2]]).to("cpu", "bfloat16")
>>> x.apply_(f)
>>> if paddle.is_compiled_with_cuda():
...     x = paddle.to_tensor([[0.3, 0.5, 0.1],
...                           [0.9, 0.9, 0.7],
...                           [0.4, 0.8, 0.2]]).to("gpu", "float32")
...     x.apply_(f)

argmax(axis: int | None = None, keepdim: bool = False, dtype: DTypeLike = 'int64', name: str | None = None) → Tensor

Computes the indices of the max elements of the input tensor along the provided axis.

- Parameters

x (Tensor) – An input N-D Tensor with type float16, float32, float64, int16, int32, int64, uint8.

axis (int|None, optional) – Axis to compute indices along. The effective range is [-R, R), where R is x.ndim. When axis < 0, it works the same way as axis + R. Default is None, in which case x is flattened and the index of the max value is selected.

keepdim (bool, optional) – Whether to keep the given axis in output. If it is True, the output has the same number of dimensions as x, with size one in the axis. Otherwise the output has one fewer dimension than x, since the axis is squeezed. Default is False.

dtype (str|np.dtype, optional) – Data type of the output tensor, which can be int32 or int64. The default value is int64, and it will return the int64 indices.

name (str|None, optional) – For details, please refer to api_guide_Name. Generally, no setting is required. Default: None.

- Returns

Tensor. An int32 tensor if dtype is set to int32; otherwise an int64 tensor.

Examples

>>> import paddle
>>> x = paddle.to_tensor([[5,8,9,5],
...                       [0,0,1,7],
...                       [6,9,2,4]])
>>> out1 = paddle.argmax(x)
>>> print(out1.numpy())
2
>>> out2 = paddle.argmax(x, axis=0)
>>> print(out2.numpy())
[2 2 0 1]
>>> out3 = paddle.argmax(x, axis=-1)
>>> print(out3.numpy())
[2 3 1]
>>> out4 = paddle.argmax(x, axis=0, keepdim=True)
>>> print(out4.numpy())
[[2 2 0 1]]
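The effect of axis=None (flatten first) versus an explicit axis on argmax can be followed with nested lists. The sketch below is plain Python for 2-D input only; `argmax_2d` is a hypothetical helper, and returning the first occurrence on ties is an assumption of this sketch rather than a documented guarantee.

```python
def argmax_2d(x, axis=None):
    """Index of the maximum of a 2-D nested list, flattened or along one axis."""
    rows, cols = len(x), len(x[0])
    if axis is None:
        flat = [v for row in x for v in row]  # row-major flattening
        return flat.index(max(flat))          # first occurrence wins in this sketch
    if not -2 <= axis < 2:
        raise ValueError("axis must be in [-R, R) with R = 2")
    axis %= 2  # axis < 0 behaves like axis + R
    if axis == 0:
        columns = [[x[i][j] for i in range(rows)] for j in range(cols)]
        return [c.index(max(c)) for c in columns]
    return [row.index(max(row)) for row in x]

x = [[5, 8, 9, 5],
     [0, 0, 1, 7],
     [6, 9, 2, 4]]
out1 = argmax_2d(x)           # index into the flattened input
out2 = argmax_2d(x, axis=0)   # one index per column
out3 = argmax_2d(x, axis=-1)  # one index per row
print(out1, out2, out3)
```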

argmin(axis: int | None = None, keepdim: bool = False, dtype: DTypeLike = 'int64', name: str | None = None) → Tensor

Computes the indices of the min elements of the input tensor along the provided axis.

- Parameters

x (Tensor) – An input N-D Tensor with type float16, float32, float64, int16, int32, int64, uint8.

axis (int|None, optional) – Axis to compute indices along. The effective range is [-R, R), where R is x.ndim. When axis < 0, it works the same way as axis + R. Default is None, in which case x is flattened and the index of the min value is selected.

keepdim (bool, optional) – Whether to keep the given axis in output. If it is True, the output has the same number of dimensions as x, with size one in the axis. Otherwise the output has one fewer dimension than x, since the axis is squeezed. Default is False.

dtype (str|np.dtype, optional) – Data type of the output tensor, which can be int32 or int64. The default value is ‘int64’, and it will return the int64 indices.

name (str|None, optional) – For details, please refer to api_guide_Name. Generally, no setting is required. Default: None.

- Returns

Tensor. An int32 tensor if dtype is set to int32; otherwise an int64 tensor.

Examples

>>> import paddle
>>> x = paddle.to_tensor([[5,8,9,5],
...                       [0,0,1,7],
...                       [6,9,2,4]])
>>> out1 = paddle.argmin(x)
>>> print(out1.numpy())
4
>>> out2 = paddle.argmin(x, axis=0)
>>> print(out2.numpy())
[1 1 1 2]
>>> out3 = paddle.argmin(x, axis=-1)
>>> print(out3.numpy())
[0 0 2]
>>> out4 = paddle.argmin(x, axis=0, keepdim=True)
>>> print(out4.numpy())
[[1 1 1 2]]

-

argsort

(

axis: int = -1,

descending: bool = False,

stable: bool = False,

name: str | None = None

)

Tensor

[source]

argsort¶

-

Sorts the input along the given axis, and returns the corresponding index tensor for the sorted output values. The default sort algorithm is ascending, if you want the sort algorithm to be descending, you must set the

descendingas True.Note

Alias Support: The parameter name

inputcan be used as an alias forx, and the parameter namedimcan be used as an alias foraxis. For example,argsort(input=tensor_x, dim=1)is equivalent to(x=tensor_x, axis=1).- Parameters

-

x (Tensor) – An input N-D Tensor with type bfloat16, float16, float32, float64, int16, int32, int64, uint8. alias:

input.axis (int, optional) – Axis to compute indices along. The effective range is [-R, R), where R is Rank(x). when axis<0, it works the same way as axis+R. Default is -1. alias:

dim.descending (bool, optional) – Descending is a flag, if set to true, algorithm will sort by descending order, else sort by ascending order. Default is false.

stable (bool, optional) – Whether to use stable sorting algorithm or not. When using stable sorting algorithm, the order of equivalent elements will be preserved. Default is False.

name (str|None, optional) – For details, please refer to api_guide_Name. Generally, no setting is required. Default: None.

- Returns

-

Tensor, sorted indices (with the same shape as x and with data type int64).

Examples

>>> import paddle
>>> x = paddle.to_tensor([[[5,8,9,5],
...                        [0,0,1,7],
...                        [6,9,2,4]],
...                       [[5,2,4,2],
...                        [4,7,7,9],
...                        [1,7,0,6]]],
...                      dtype='float32')
>>> out1 = paddle.argsort(x, axis=-1)
>>> out2 = paddle.argsort(x, axis=0)
>>> out3 = paddle.argsort(x, axis=1)
>>> print(out1)
Tensor(shape=[2, 3, 4], dtype=int64, place=Place(cpu), stop_gradient=True,
[[[0, 3, 1, 2],
  [0, 1, 2, 3],
  [2, 3, 0, 1]],
 [[1, 3, 2, 0],
  [0, 1, 2, 3],
  [2, 0, 3, 1]]])
>>> print(out2)
Tensor(shape=[2, 3, 4], dtype=int64, place=Place(cpu), stop_gradient=True,
[[[0, 1, 1, 1],
  [0, 0, 0, 0],
  [1, 1, 1, 0]],
 [[1, 0, 0, 0],
  [1, 1, 1, 1],
  [0, 0, 0, 1]]])
>>> print(out3)
Tensor(shape=[2, 3, 4], dtype=int64, place=Place(cpu), stop_gradient=True,
[[[1, 1, 1, 2],
  [0, 0, 2, 0],
  [2, 2, 0, 1]],
 [[2, 0, 2, 0],
  [1, 1, 0, 2],
  [0, 2, 1, 1]]])
>>> x = paddle.to_tensor([1, 0]*40, dtype='float32')
>>> out1 = paddle.argsort(x, stable=False)
>>> out2 = paddle.argsort(x, stable=True)
>>> print(out1)
Tensor(shape=[80], dtype=int64, place=Place(cpu), stop_gradient=True,
[55, 29, 31, 33, 35, 37, 39, 41, 43, 45, 47, 49, 51, 53, 1 , 57, 59, 61,
 63, 65, 67, 69, 71, 73, 75, 77, 79, 17, 11, 13, 25, 7 , 3 , 27, 23, 19,
 15, 5 , 21, 9 , 10, 64, 62, 68, 60, 58, 8 , 66, 14, 6 , 70, 72, 4 , 74,
 76, 2 , 78, 0 , 20, 28, 26, 30, 32, 24, 34, 36, 22, 38, 40, 12, 42, 44,
 18, 46, 48, 16, 50, 52, 54, 56])
>>> print(out2)
Tensor(shape=[80], dtype=int64, place=Place(cpu), stop_gradient=True,
[1 , 3 , 5 , 7 , 9 , 11, 13, 15, 17, 19, 21, 23, 25, 27, 29, 31, 33, 35,
 37, 39, 41, 43, 45, 47, 49, 51, 53, 55, 57, 59, 61, 63, 65, 67, 69, 71,
 73, 75, 77, 79, 0 , 2 , 4 , 6 , 8 , 10, 12, 14, 16, 18, 20, 22, 24, 26,
 28, 30, 32, 34, 36, 38, 40, 42, 44, 46, 48, 50, 52, 54, 56, 58, 60, 62,
 64, 66, 68, 70, 72, 74, 76, 78])
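What "stable" means for the returned indices can be illustrated without Paddle. The sketch below relies on Python's built-in `sorted`, which is guaranteed stable; `argsort_stable` is a hypothetical helper name, and the code says nothing about how Paddle implements its kernels.

```python
# Plain-Python sketch of a stable argsort on a 1-D list (hypothetical helper).

def argsort_stable(values, descending=False):
    """Indices that sort `values`; equal elements keep their original order."""
    # sorted() is stable, and reverse=True preserves the order of equal keys.
    return sorted(range(len(values)), key=values.__getitem__, reverse=descending)


x = [1, 0] * 4                      # [1, 0, 1, 0, 1, 0, 1, 0]
ascending = argsort_stable(x)       # zeros first, each group in index order
descending = argsort_stable(x, descending=True)
```

A stable result lists the indices of equal values in increasing order, which is the same pattern as the `stable=True` output in the example.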

-

argwhere

(

)

Tensor

[source]

argwhere¶

-

Return a tensor containing the indices of all non-zero elements of the input tensor. The returned tensor has shape [z, n], where z is the number of all non-zero elements in the input tensor, and n is the number of dimensions in the input tensor.

- Parameters

-

input (Tensor) – The input tensor variable.

- Returns

-

Tensor, The data type is int64.

Examples

>>> import paddle
>>> x = paddle.to_tensor([[1.0, 0.0, 0.0],
...                       [0.0, 2.0, 0.0],
...                       [0.0, 0.0, 3.0]])
>>> out = paddle.tensor.search.argwhere(x)
>>> print(out)
Tensor(shape=[3, 2], dtype=int64, place=Place(cpu), stop_gradient=True,
[[0, 0],
 [1, 1],
 [2, 2]])
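The [z, n] output shape can be seen in a plain-Python sketch for the 2-D case: one row per non-zero element (z rows), one column per input dimension (n = 2). `argwhere_2d` is a hypothetical helper, not a Paddle function.

```python
# Plain-Python sketch of argwhere for a 2-D nested list (hypothetical helper).

def argwhere_2d(rows):
    """Return [row, column] coordinates of every non-zero element, in row-major order."""
    return [[i, j]
            for i, row in enumerate(rows)
            for j, value in enumerate(row)
            if value != 0]


x = [[1.0, 0.0, 0.0],
     [0.0, 2.0, 0.0],
     [0.0, 0.0, 3.0]]

coords = argwhere_2d(x)
shape = [len(coords), 2]   # [z, n]
```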

-

as_complex

(

name: str | None = None

)

Tensor

[source]

as_complex¶

-

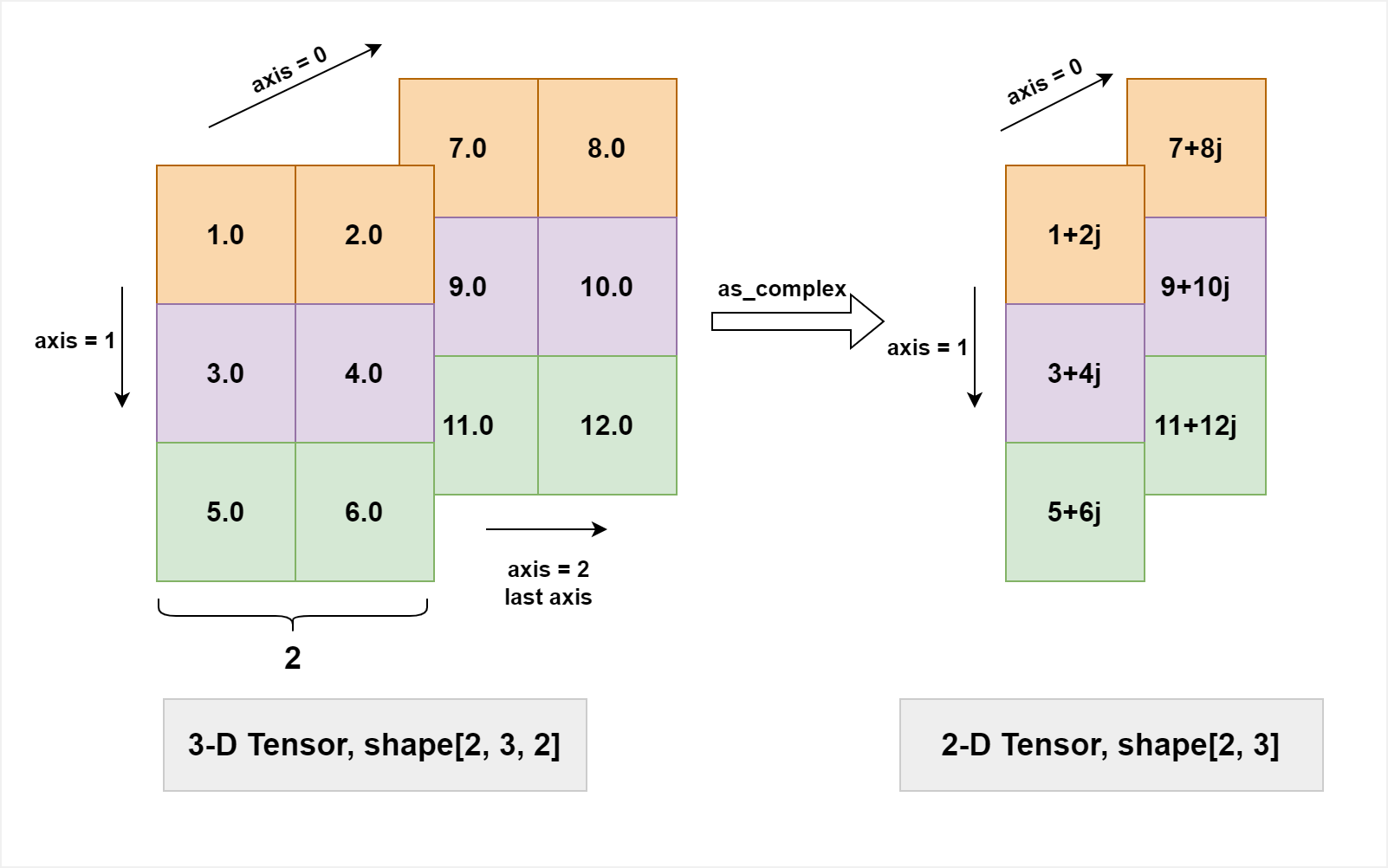

Transform a real tensor to a complex tensor.

The data type of the input tensor is ‘float32’ or ‘float64’, and the data type of the returned tensor is ‘complex64’ or ‘complex128’, respectively.

The shape of the input tensor is

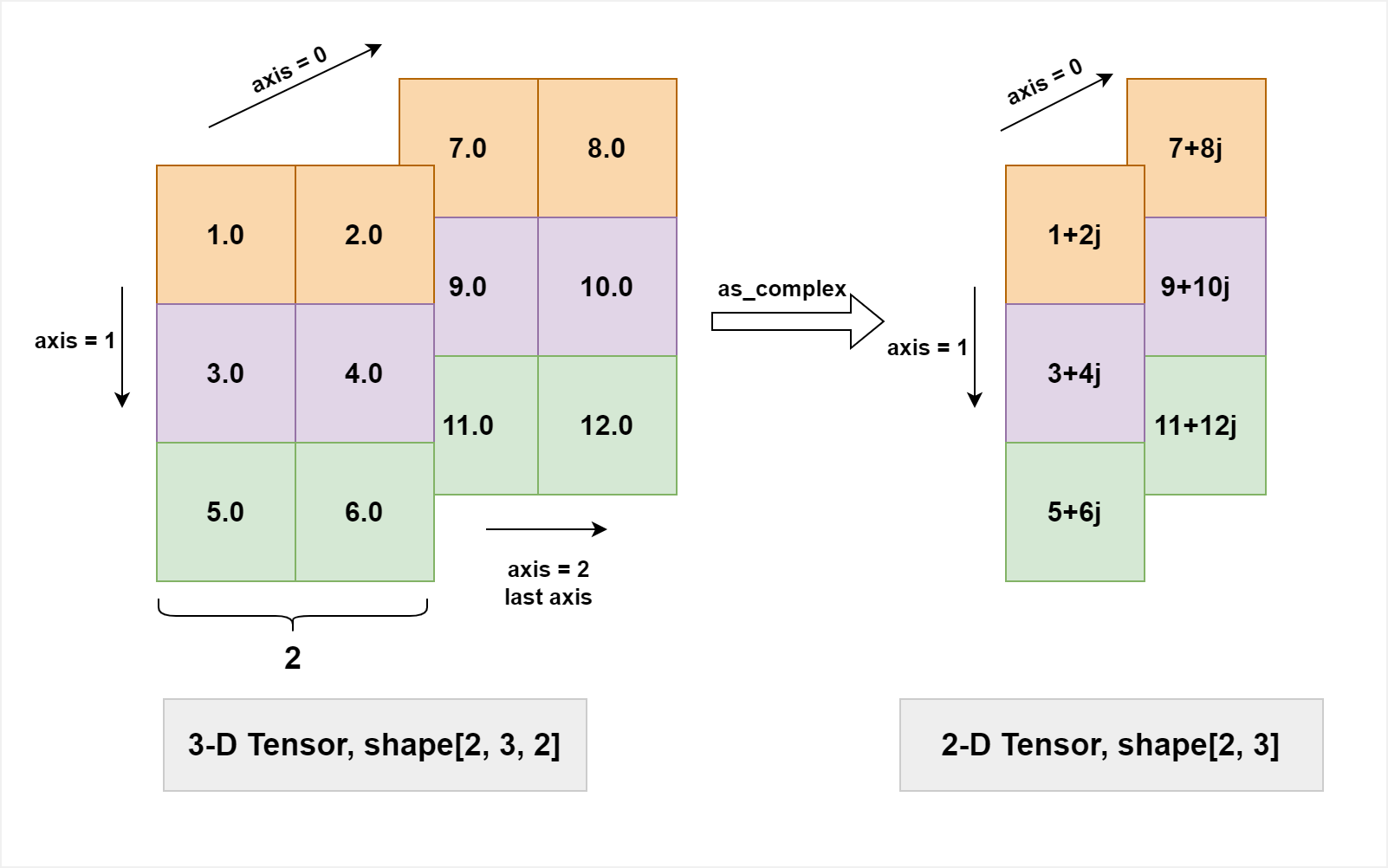

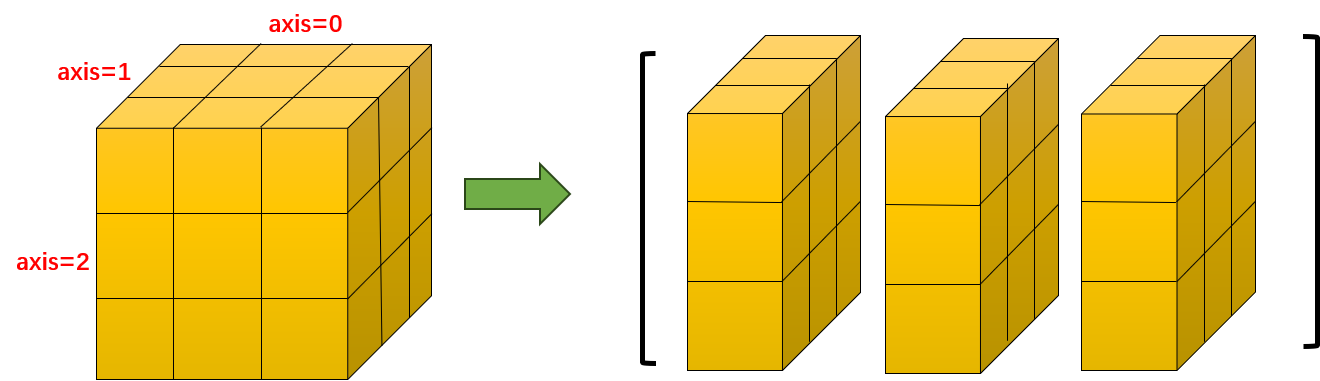

(* ,2), (*means arbitrary shape), i.e. the size of the last axis should be 2, which represent the real and imag part of a complex number. The shape of the returned tensor is(*,).The image below demonstrates the case that a real 3D-tensor with shape [2, 3, 2] is transformed into a complex 2D-tensor with shape [2, 3].

- Parameters

-

x (Tensor) – The input tensor. Data type is ‘float32’ or ‘float64’.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

Tensor, The output. Data type is ‘complex64’ or ‘complex128’, with the same precision as the input.

Examples

>>> import paddle
>>> x = paddle.arange(12, dtype=paddle.float32).reshape([2, 3, 2])
>>> y = paddle.as_complex(x)
>>> print(y)
Tensor(shape=[2, 3], dtype=complex64, place=Place(cpu), stop_gradient=True,
[[1j      , (2+3j)  , (4+5j)  ],
 [(6+7j)  , (8+9j)  , (10+11j)]])

-

as_real

(

name: str | None = None

)

Tensor

[source]

as_real¶

-

Transform a complex tensor to a real tensor.

The data type of the input tensor is ‘complex64’ or ‘complex128’, and the data type of the returned tensor is ‘float32’ or ‘float64’, respectively.

When the shape of the input tensor is (*,), where * means arbitrary shape, the shape of the output tensor is (*, 2); i.e. the shape of the output is the shape of the input with an extra 2 appended.

- Parameters

-

x (Tensor) – The input tensor. Data type is ‘complex64’ or ‘complex128’.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

Tensor, The output. Data type is ‘float32’ or ‘float64’, with the same precision as the input.

Examples

>>> import paddle
>>> x = paddle.arange(12, dtype=paddle.float32).reshape([2, 3, 2])
>>> y = paddle.as_complex(x)
>>> z = paddle.as_real(y)
>>> print(z)
Tensor(shape=[2, 3, 2], dtype=float32, place=Place(cpu), stop_gradient=True,
[[[0. , 1. ],
  [2. , 3. ],
  [4. , 5. ]],
 [[6. , 7. ],
  [8. , 9. ],
  [10., 11.]]])
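The relationship between as_complex and as_real (a trailing axis of size 2 is folded into, or unfolded from, one complex number) can be sketched with Python's built-in `complex` type. The two helpers are hypothetical stand-ins that operate on a single list of pairs, not on tensors.

```python
# Plain-Python sketch of the as_complex / as_real round trip (hypothetical helpers).

def as_complex(pairs):
    """[[re, im], ...] -> [complex, ...]; the last axis of size 2 disappears."""
    return [complex(re, im) for re, im in pairs]


def as_real(values):
    """[complex, ...] -> [[re, im], ...]; an axis of size 2 is appended."""
    return [[z.real, z.imag] for z in values]


pairs = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
z = as_complex(pairs)
round_trip = as_real(z)
```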

-

as_strided

(

shape: Sequence[int],

stride: Sequence[int],

offset: int = 0,

name: str | None = None

)

Tensor

[source]

as_strided¶

-

View x with specified shape, stride and offset.

Note that the output Tensor will share data with origin Tensor and doesn’t have a Tensor copy in

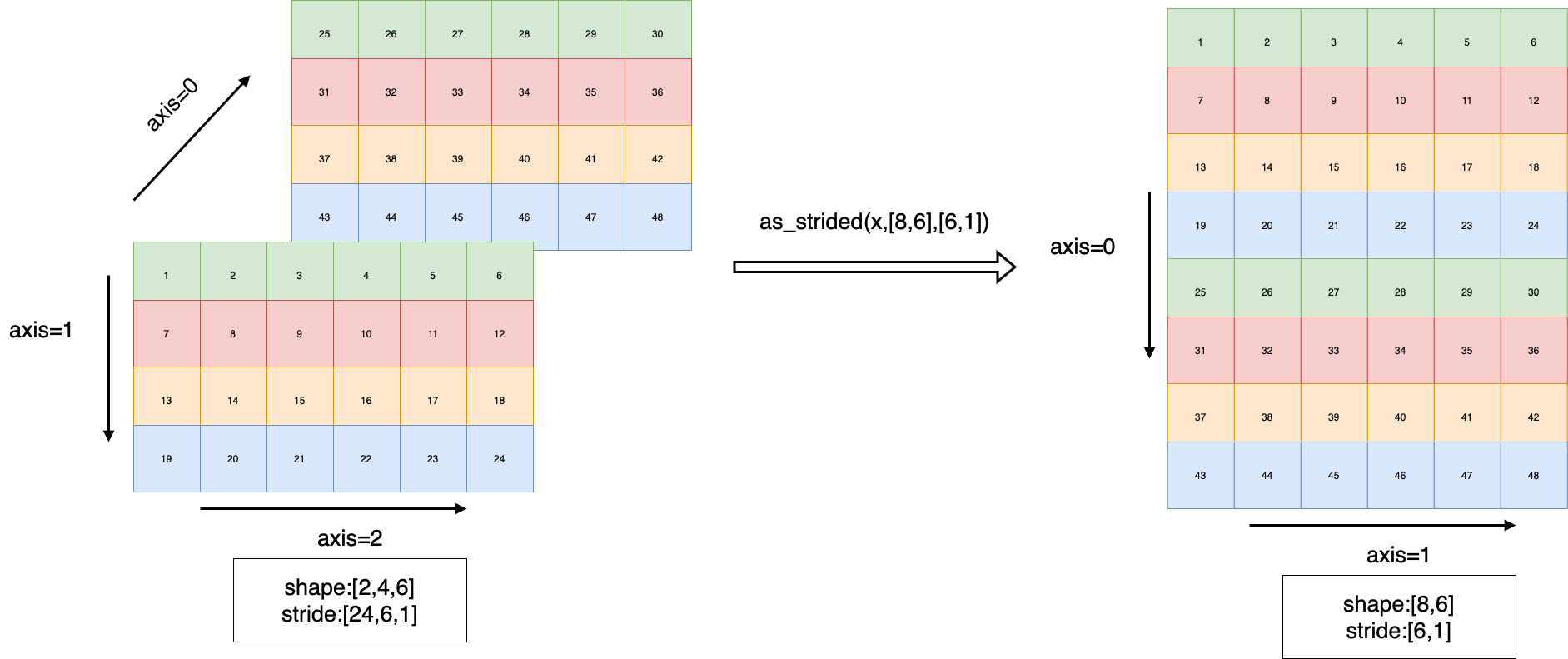

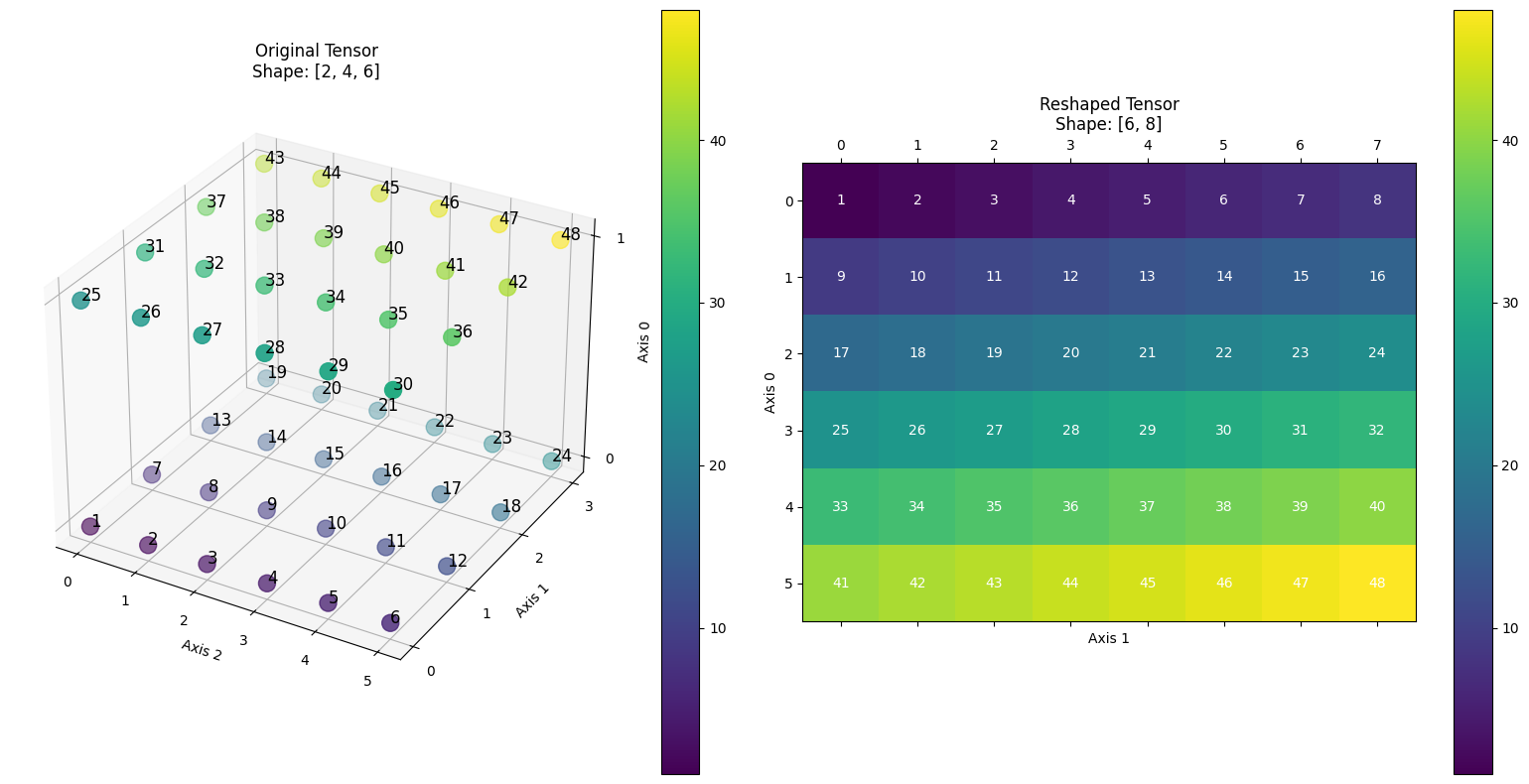

dygraphmode.The following image illustrates an example: transforming an input Tensor with shape [2,4,6] into a Tensor with

shape [8,6]andstride [6,1].

- Parameters

-

x (Tensor) – An N-D Tensor. The data type is float32, float64, int32, int64 or bool.

shape (list|tuple) – Define the target shape. Each element of it should be integer.

stride (list|tuple) – Define the target stride. Each element of it should be integer.

offset (int, optional) – Define the target Tensor’s offset from x’s holder. Default: 0.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

Tensor, an as_strided Tensor with the same data type as x.

Examples

>>> import paddle
>>> paddle.base.set_flags({"FLAGS_use_stride_kernel": True})
>>> x = paddle.rand([2, 4, 6], dtype="float32")
>>> out = paddle.as_strided(x, [8, 6], [6, 1])
>>> print(out.shape)
[8, 6]
>>> # the stride is [6, 1].
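How shape, stride and offset select elements can be sketched on a flat Python list, assuming the usual strided-indexing rule: element [i, j] is read from position offset + i * stride[0] + j * stride[1] of the underlying buffer. The helper below is hypothetical and, unlike the real operation, it copies values instead of sharing memory.

```python
# Plain-Python sketch of 2-D strided indexing over a flat buffer (hypothetical helper).

def as_strided_2d(buffer, shape, stride, offset=0):
    """Materialize the 2-D strided view of `buffer` as a nested list."""
    rows, cols = shape
    return [[buffer[offset + i * stride[0] + j * stride[1]] for j in range(cols)]
            for i in range(rows)]


buffer = list(range(2 * 4 * 6))          # stands in for the storage of a [2, 4, 6] tensor

# Same setting as the example: shape [8, 6], stride [6, 1].
view = as_strided_2d(buffer, [8, 6], [6, 1])

# Strides smaller than the row length give overlapping windows.
windows = as_strided_2d(buffer, [3, 4], [1, 1])
```

With stride [6, 1] each row starts 6 elements after the previous one, so the 48 stored values are simply regrouped into 8 rows of 6.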

-

asin

(

name: str | None = None

)

Tensor

[source]

asin¶

-

Arcsine Operator.

\[out = sin^{-1}(x)\]

- Parameters

-

x (Tensor) – Input of Asin operator, an N-D Tensor, with data type float32, float64, float16, bfloat16, uint8, int8, int16, int32, int64, complex64 or complex128.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

Tensor. Same shape and dtype as input (integer types are autocasted into float32).

Examples

>>> import paddle
>>> x = paddle.to_tensor([-0.4, -0.2, 0.1, 0.3])
>>> out = paddle.asin(x)
>>> print(out)
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[-0.41151685, -0.20135793, 0.10016742, 0.30469266])

-

asin_

(

name=None

)

asin_¶

-

In-place version of the asin API: the result overwrites the input x. Please refer to asin.

-

asinh

(

name: str | None = None

)

Tensor

[source]

asinh¶

-

Asinh Activation Operator.

\[out = asinh(x)\]

- Parameters

-

x (Tensor) – Input of Asinh operator, an N-D Tensor, with data type float32, float64, float16, bfloat16, uint8, int8, int16, int32, int64, complex64 or complex128.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

Tensor. Output of Asinh operator, a Tensor with the same shape as the input (integer types are autocasted into float32).

Examples

>>> import paddle
>>> x = paddle.to_tensor([-0.4, -0.2, 0.1, 0.3])
>>> out = paddle.asinh(x)
>>> print(out)
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[-0.39003533, -0.19869010, 0.09983408, 0.29567307])

-

asinh_

(

name=None

)

asinh_¶

-

In-place version of the asinh API: the result overwrites the input x. Please refer to asinh.

-

astype

(

dtype: DTypeLike

)

Tensor

astype¶

-

Cast a Tensor to a specified data type if it differs from the current dtype; otherwise, return the original Tensor.

- Parameters

-

dtype – The target data type.

- Returns

-

a new Tensor with target dtype

- Return type

-

Tensor

Examples

>>> import paddle
>>> import numpy as np
>>> original_tensor = paddle.ones([2, 2])
>>> print("original tensor's dtype is: {}".format(original_tensor.dtype))
original tensor's dtype is: paddle.float32
>>> new_tensor = original_tensor.astype('float32')
>>> print("new tensor's dtype is: {}".format(new_tensor.dtype))
new tensor's dtype is: paddle.float32

-

atan

(

name: str | None = None

)

Tensor

[source]

atan¶

-

Arctangent Operator.

\[out = tan^{-1}(x)\]

- Parameters

-

x (Tensor) – Input of Atan operator, an N-D Tensor, with data type float32, float64, float16, bfloat16, uint8, int8, int16, int32, int64, complex64 or complex128.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

Tensor. Same shape and dtype as input x (integer types are autocasted into float32).

Examples

>>> import paddle
>>> x = paddle.to_tensor([-0.4, -0.2, 0.1, 0.3])
>>> out = paddle.atan(x)
>>> print(out)
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[-0.38050640, -0.19739556, 0.09966865, 0.29145682])

-

atan2

(

y: Tensor,

name: str | None = None

)

Tensor

[source]

atan2¶

-

Element-wise arctangent of x/y with consideration of the quadrant.

- Equation:

-

\[\begin{split}atan2(x,y)=\left\{\begin{matrix} & tan^{-1}(\frac{x}{y}) & y > 0 \\ & tan^{-1}(\frac{x}{y}) + \pi & x>=0, y < 0 \\ & tan^{-1}(\frac{x}{y}) - \pi & x<0, y < 0 \\ & +\frac{\pi}{2} & x>0, y = 0 \\ & -\frac{\pi}{2} & x<0, y = 0 \\ &\text{undefined} & x=0, y = 0 \end{matrix}\right.\end{split}\]

- Parameters

-

x (Tensor) – An N-D Tensor, the data type is int32, int64, float16, float32, float64.

y (Tensor) – An N-D Tensor, must have the same type as x.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

An N-D Tensor with the same shape and data type as the input (the output data type is float64 when the input data type is int).

- Return type

-

out (Tensor)

Examples

>>> import paddle
>>> x = paddle.to_tensor([-1, +1, +1, -1]).astype('float32')
>>> x
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[-1, 1, 1, -1])
>>> y = paddle.to_tensor([-1, -1, +1, +1]).astype('float32')
>>> y
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[-1, -1, 1, 1])
>>> out = paddle.atan2(x, y)
>>> out
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[-2.35619450, 2.35619450, 0.78539819, -0.78539819])
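The piecewise equation can be checked case by case in plain Python. The sketch below transcribes the cases as written above (x is the numerator, y the denominator) and compares them with the standard library's `math.atan2`; `atan2_piecewise` is a hypothetical name used only for illustration.

```python
import math

# Plain-Python transcription of the piecewise atan2 definition (hypothetical helper).

def atan2_piecewise(x, y):
    """Quadrant-aware arctangent of x / y, following the documented cases."""
    if y > 0:
        return math.atan(x / y)
    if y < 0:
        # Shift by +pi or -pi depending on the sign of x.
        return math.atan(x / y) + (math.pi if x >= 0 else -math.pi)
    if x > 0:
        return math.pi / 2
    if x < 0:
        return -math.pi / 2
    raise ValueError("atan2 is undefined at x = 0, y = 0")


xs = [-1.0, 1.0, 1.0, -1.0]
ys = [-1.0, -1.0, 1.0, 1.0]
results = [atan2_piecewise(x, y) for x, y in zip(xs, ys)]
reference = [math.atan2(x, y) for x, y in zip(xs, ys)]
```

The four inputs cover one point per quadrant, so each branch with a non-zero y is exercised once or twice.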

-

atan_

(

name=None

)

atan_¶

-

In-place version of the atan API: the result overwrites the input x. Please refer to atan.

-

atanh

(

name: str | None = None

)

Tensor

[source]

atanh¶

-

Atanh Activation Operator.

\[out = atanh(x)\]

- Parameters

-

x (Tensor) – Input of Atanh operator, an N-D Tensor, with data type float32, float64, float16, bfloat16, uint8, int8, int16, int32, int64, complex64 or complex128.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

Tensor. Output of Atanh operator, a Tensor with the same shape as the input (integer types are autocasted into float32).

Examples

>>> import paddle
>>> x = paddle.to_tensor([-0.4, -0.2, 0.1, 0.3])
>>> out = paddle.atanh(x)
>>> print(out)
Tensor(shape=[4], dtype=float32, place=Place(cpu), stop_gradient=True,
[-0.42364895, -0.20273255, 0.10033534, 0.30951962])

-

atanh_

(

name=None

)

atanh_¶

-

In-place version of the atanh API: the result overwrites the input x. Please refer to atanh.

-

atleast_1d

(

*,

name=None

)

[source]

atleast_1d¶

-

Convert inputs to tensors and return views with at least 1 dimension. Scalar (0-D) inputs are converted to 1-D tensors; inputs with one or more dimensions are preserved.

- Parameters

-

inputs (list[Tensor]) – One or more tensors. The data type is float16, float32, float64, int16, int32, int64, int8, uint8, complex64, complex128, bfloat16 or bool.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

One Tensor, if there is only one input. A list of Tensors, if there is more than one input.

Examples

>>> import paddle
>>> # one input
>>> x = paddle.to_tensor(123, dtype='int32')
>>> out = paddle.atleast_1d(x)
>>> print(out)
Tensor(shape=[1], dtype=int32, place=Place(cpu), stop_gradient=True,
[123])
>>> # more than one inputs
>>> x = paddle.to_tensor(123, dtype='int32')
>>> y = paddle.to_tensor([1.23], dtype='float32')
>>> out = paddle.atleast_1d(x, y)
>>> print(out)
[Tensor(shape=[1], dtype=int32, place=Place(cpu), stop_gradient=True,
[123]), Tensor(shape=[1], dtype=float32, place=Place(cpu), stop_gradient=True,
[1.23000002])]
>>> # more than 1-D input
>>> x = paddle.to_tensor(123, dtype='int32')
>>> y = paddle.to_tensor([[1.23]], dtype='float32')
>>> out = paddle.atleast_1d(x, y)
>>> print(out)
[Tensor(shape=[1], dtype=int32, place=Place(cpu), stop_gradient=True,
[123]), Tensor(shape=[1, 1], dtype=float32, place=Place(cpu), stop_gradient=True,
[[1.23000002]])]

-

atleast_2d

(

*,

name=None

)

[source]

atleast_2d¶

-

Convert inputs to tensors and return views with at least 2 dimensions. Inputs with two or more dimensions are preserved.

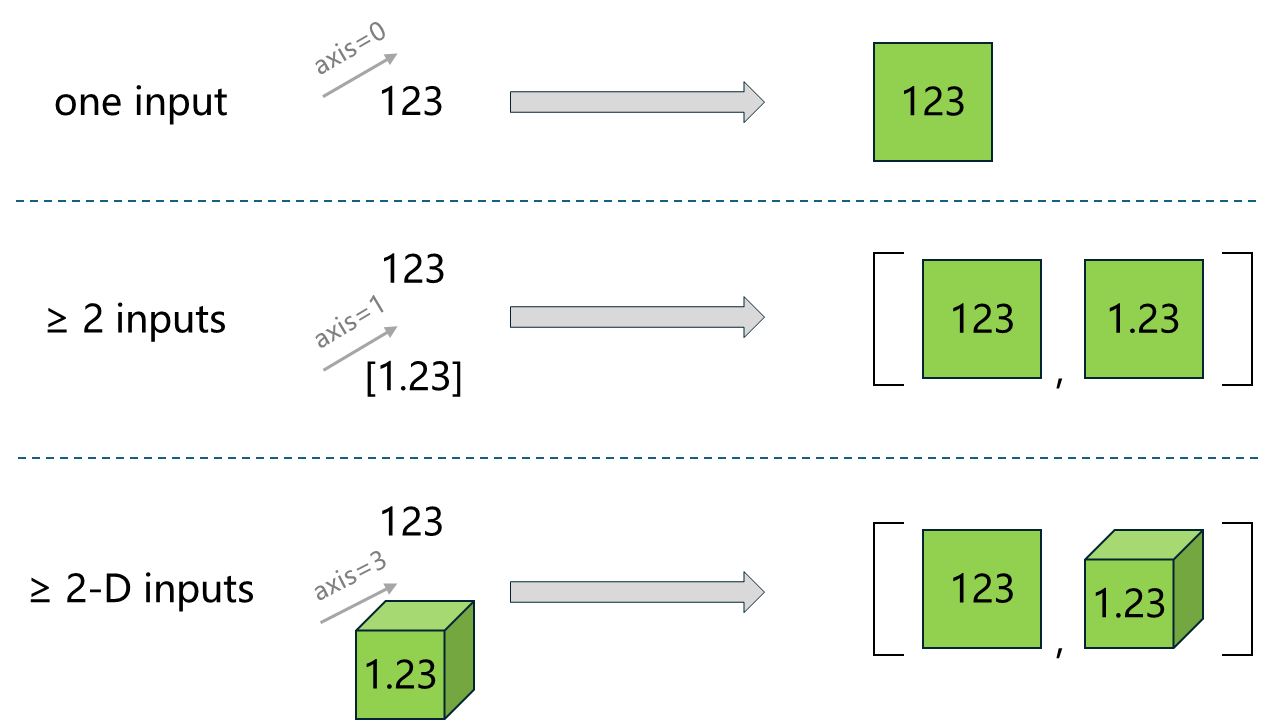

The following diagram illustrates the behavior of atleast_2d on different dimensional inputs for the following cases:

A 0-dim tensor input.

A 0-dim tensor and a 1-dim tensor input.

A 0-dim tensor and a 3-dim tensor input.

In each case, the function returns the tensors (or a list of tensors) in views with at least 2 dimensions.

- Parameters

-

inputs (Tensor|list(Tensor)) – One or more tensors. The data type is float16, float32, float64, int16, int32, int64, int8, uint8, complex64, complex128, bfloat16 or bool.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

One Tensor, if there is only one input. A list of Tensors, if there is more than one input.

Examples

>>> import paddle
>>> # one input
>>> x = paddle.to_tensor(123, dtype='int32')
>>> out = paddle.atleast_2d(x)
>>> print(out)
Tensor(shape=[1, 1], dtype=int32, place=Place(cpu), stop_gradient=True,
[[123]])
>>> # more than one inputs
>>> x = paddle.to_tensor(123, dtype='int32')
>>> y = paddle.to_tensor([1.23], dtype='float32')
>>> out = paddle.atleast_2d(x, y)
>>> print(out)
[Tensor(shape=[1, 1], dtype=int32, place=Place(cpu), stop_gradient=True,
[[123]]), Tensor(shape=[1, 1], dtype=float32, place=Place(cpu), stop_gradient=True,
[[1.23000002]])]
>>> # more than 2-D input
>>> x = paddle.to_tensor(123, dtype='int32')
>>> y = paddle.to_tensor([[[1.23]]], dtype='float32')
>>> out = paddle.atleast_2d(x, y)
>>> print(out)
[Tensor(shape=[1, 1], dtype=int32, place=Place(cpu), stop_gradient=True,
[[123]]), Tensor(shape=[1, 1, 1], dtype=float32, place=Place(cpu), stop_gradient=True,
[[[1.23000002]]])]

-

atleast_3d

(

*,

name=None

)

[source]

atleast_3d¶

-

Convert inputs to tensors and return views with at least 3 dimensions. Inputs with three or more dimensions are preserved.

- Parameters

-

inputs (Tensor|list(Tensor)) – One or more tensors. The data type is float16, float32, float64, int16, int32, int64, int8, uint8, complex64, complex128, bfloat16 or bool.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

One Tensor, if there is only one input. A list of Tensors, if there is more than one input.

Examples

>>> import paddle
>>> # one input
>>> x = paddle.to_tensor(123, dtype='int32')
>>> out = paddle.atleast_3d(x)
>>> print(out)
Tensor(shape=[1, 1, 1], dtype=int32, place=Place(cpu), stop_gradient=True,
[[[123]]])
>>> # more than one inputs
>>> x = paddle.to_tensor(123, dtype='int32')
>>> y = paddle.to_tensor([1.23], dtype='float32')
>>> out = paddle.atleast_3d(x, y)
>>> print(out)
[Tensor(shape=[1, 1, 1], dtype=int32, place=Place(cpu), stop_gradient=True,
[[[123]]]), Tensor(shape=[1, 1, 1], dtype=float32, place=Place(cpu), stop_gradient=True,
[[[1.23000002]]])]
>>> # more than 3-D input
>>> x = paddle.to_tensor(123, dtype='int32')
>>> y = paddle.to_tensor([[[[1.23]]]], dtype='float32')
>>> out = paddle.atleast_3d(x, y)
>>> print(out)
[Tensor(shape=[1, 1, 1], dtype=int32, place=Place(cpu), stop_gradient=True,
[[[123]]]), Tensor(shape=[1, 1, 1, 1], dtype=float32, place=Place(cpu), stop_gradient=True,
[[[[1.23000002]]]])]

-

backward

(

grad_tensor: Tensor | None = None,

retain_graph: bool = False

)

None

backward¶

-

Run backward of current Graph which starts from current Tensor.

The new gradient will accumulate on previous gradient.

You can clear gradient by Tensor.clear_grad().

- Parameters

-

grad_tensor (Tensor|None, optional) – initial gradient values of the current Tensor. If grad_tensor is None, the initial gradient values of the current Tensor would be Tensor filled with 1.0; if grad_tensor is not None, it must have the same length as the current Tensor. The default value is None.

retain_graph (bool, optional) – If False, the graph used to compute grads will be freed. If you would like to add more ops to the built graph after calling this method (backward), set the parameter retain_graph to True, then the grads will be retained. Thus, setting it to False is much more memory-efficient. Defaults to False.

- Returns

-

None

Examples

>>> import paddle
>>> x = paddle.to_tensor(5., stop_gradient=False)
>>> for i in range(5):
...     y = paddle.pow(x, 4.0)
...     y.backward()
...     print("{}: {}".format(i, x.grad))
0: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
500.)
1: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
1000.)
2: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
1500.)
3: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
2000.)
4: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
2500.)
>>> x.clear_grad()
>>> print("{}".format(x.grad))
Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
0.)
>>> grad_tensor=paddle.to_tensor(2.)
>>> for i in range(5):
...     y = paddle.pow(x, 4.0)
...     y.backward(grad_tensor)
...     print("{}: {}".format(i, x.grad))
0: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
1000.)
1: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
2000.)
2: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
3000.)
3: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
4000.)
4: Tensor(shape=[], dtype=float32, place=Place(cpu), stop_gradient=False,
5000.)
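The numbers in the example follow from the accumulation rule stated above: for y = x^4 the derivative is 4x^3, which is 500 at x = 5, and each call adds grad_tensor times that value to the stored gradient. The sketch below reproduces only this arithmetic in plain Python; `accumulate` and `grad_of_pow4` are hypothetical names, and no autograd machinery is involved.

```python
# Plain-Python sketch of gradient accumulation for y = x ** 4 (hypothetical helpers).

def grad_of_pow4(x):
    """Analytic derivative of x ** 4."""
    return 4.0 * x ** 3


def accumulate(x, steps, grad_output=1.0):
    """Add grad_output * dy/dx to a running total once per backward call."""
    grad = 0.0                      # state after clear_grad()
    history = []
    for _ in range(steps):
        grad += grad_output * grad_of_pow4(x)
        history.append(grad)
    return history


default_run = accumulate(5.0, steps=5)                   # grad_tensor=None acts like 1.0
scaled_run = accumulate(5.0, steps=5, grad_output=2.0)   # grad_tensor = 2.0
```

Skipping the reset between iterations is what makes the values grow by a constant step instead of staying at 500.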

-

baddbmm

(

x: Tensor,

y: Tensor,

beta: float = 1.0,

alpha: float = 1.0,

name: str | None = None

)

Tensor

[source]

baddbmm¶

-

baddbmm

Perform batch matrix multiplication for input $x$ and $y$. $input$ is added to the final result. The equation is:

\[Out = alpha * x * y + beta * input\]

$Input$, $x$ and $y$ can carry the LoD (Level of Details) information, or not. But the output only shares the LoD information with input $input$.

- Parameters

-

input (Tensor) – The input Tensor to be added to the final result.

x (Tensor) – The first input Tensor for batch matrix multiplication.

y (Tensor) – The second input Tensor for batch matrix multiplication.

beta (float, optional) – Coefficient of $input$, default is 1.

alpha (float, optional) – Coefficient of $x*y$, default is 1.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

The output Tensor of baddbmm.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.ones([2, 2, 2])
>>> y = paddle.ones([2, 2, 2])
>>> input = paddle.ones([2, 2, 2])
>>> out = paddle.baddbmm(input=input, x=x, y=y, beta=0.5, alpha=5.0)
>>> out
Tensor(shape=[2, 2, 2], dtype=float32, place=Place(cpu), stop_gradient=True,
[[[10.50000000, 10.50000000],
  [10.50000000, 10.50000000]],
 [[10.50000000, 10.50000000],
  [10.50000000, 10.50000000]]])
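The value 10.5 in the example can be reproduced by applying the equation batch by batch: each 2x2 product of ones is a matrix of 2s, so 5.0 * 2 + 0.5 * 1 = 10.5. The nested-list helpers below are a hypothetical reference sketch for small inputs, not how Paddle computes the result.

```python
# Plain-Python sketch of Out = alpha * (x @ y) + beta * input, per batch (hypothetical helpers).

def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def baddbmm(inp, x, y, beta=1.0, alpha=1.0):
    """Apply the equation independently to every matrix in the batch."""
    out = []
    for inp_m, x_m, y_m in zip(inp, x, y):
        prod = matmul(x_m, y_m)
        out.append([[alpha * p + beta * i for p, i in zip(prod_row, inp_row)]
                    for prod_row, inp_row in zip(prod, inp_m)])
    return out


ones = [[[1.0, 1.0], [1.0, 1.0]] for _ in range(2)]   # shape [2, 2, 2]
out = baddbmm(ones, ones, ones, beta=0.5, alpha=5.0)
```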

-

baddbmm_

(

x: Tensor,

y: Tensor,

beta: float = 1.0,

alpha: float = 1.0,

name: str | None = None

)

Tensor

[source]

baddbmm_¶

-

In-place version of the baddbmm API: the result overwrites the input tensor input. Please refer to baddbmm.

-

bernoulli_

(

p: float | Tensor = 0.5,

name: str | None = None

)

Tensor

[source]

bernoulli_¶

-

This is the in-place version of the bernoulli API, which returns a Tensor filled with random values sampled from a Bernoulli distribution. The result overwrites the input x. Please refer to bernoulli.

- Parameters

-

x (Tensor) – The input tensor to be filled with random values.

p (float|Tensor, optional) – The success probability parameter of the output Tensor’s Bernoulli distribution. If p is a float, all elements of the output Tensor share the same success probability. If p is a Tensor, it holds per-element success probabilities, and its shape should be broadcastable to x. Default is 0.5.

name (str|None, optional) – The default value is None. Normally there is no need for user to set this property. For more information, please refer to api_guide_Name.

- Returns

-

Tensor, a Tensor filled with random values sampled from the Bernoulli distribution with success probability p.

Examples

>>> import paddle
>>> paddle.set_device('cpu')
>>> paddle.seed(200)
>>> x = paddle.randn([3, 4])
>>> x.bernoulli_()
>>> print(x)
Tensor(shape=[3, 4], dtype=float32, place=Place(cpu), stop_gradient=True,
[[0., 1., 0., 1.],
 [1., 1., 0., 1.],
 [0., 1., 0., 0.]])
>>> x = paddle.randn([3, 4])
>>> p = paddle.randn([3, 1])
>>> x.bernoulli_(p)
>>> print(x)
Tensor(shape=[3, 4], dtype=float32, place=Place(cpu), stop_gradient=True,
[[1., 1., 1., 1.],
 [0., 0., 0., 0.],
 [0., 0., 0., 0.]])
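The difference between a scalar p and a broadcast p can be sketched with the standard `random` module. The helper `bernoulli_fill` is hypothetical; it handles only a 2-D list and a per-row probability list (the analogue of a [rows, 1] tensor), and its random stream is unrelated to Paddle's generator.

```python
import random

# Plain-Python sketch of in-place Bernoulli sampling on a 2-D list (hypothetical helper).

def bernoulli_fill(rows, p=0.5, rng=random):
    """Overwrite every element with 1.0 (probability p) or 0.0, in place."""
    for r, row in enumerate(rows):
        # A list of probabilities is applied per row, mimicking broadcast from shape [rows, 1].
        prob = p[r] if isinstance(p, (list, tuple)) else p
        for c in range(len(row)):
            row[c] = 1.0 if rng.random() < prob else 0.0
    return rows


rng = random.Random(200)                     # local generator for repeatability

x = [[0.0] * 4 for _ in range(3)]
bernoulli_fill(x, rng=rng)                   # every element uses p = 0.5

y = [[0.0] * 4 for _ in range(3)]
bernoulli_fill(y, p=[1.0, 0.0, 0.0], rng=rng)   # one probability per row
```

With per-row probabilities of 1.0 and 0.0 the outcome is deterministic, which makes the row-wise effect of broadcasting easy to see.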

-

bfloat16

(

)

Tensor

[source]

bfloat16¶

-

Cast a Tensor to bfloat16 data type if it differs from the current dtype; otherwise, return the original Tensor.

- Returns

-

a new Tensor with bfloat16 dtype

- Return type

-

Tensor

-

bincount

(

weights: Tensor | None = None,

minlength: int = 0,

name: str | None = None

)

Tensor

[source]

bincount¶

-

Computes frequency of each value in the input tensor.

- Parameters

-

x (Tensor) – A Tensor with non-negative integer. Should be 1-D tensor.

weights (Tensor, optional) – Weight for each value in the input tensor. Should have the same shape as input. Default is None.

minlength (int, optional) – Minimum number of bins. Should be non-negative integer. Default is 0.

name (str|None, optional) – Normally there is no need for user to set this property. For more information, please refer to api_guide_Name. Default is None.

- Returns

-

The tensor of frequency.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([1, 2, 1, 4, 5])
>>> result1 = paddle.bincount(x)
>>> print(result1)
Tensor(shape=[6], dtype=int64, place=Place(cpu), stop_gradient=True,
[0, 2, 1, 0, 1, 1])
>>> w = paddle.to_tensor([2.1, 0.4, 0.1, 0.5, 0.5])
>>> result2 = paddle.bincount(x, weights=w)
>>> print(result2)
Tensor(shape=[6], dtype=float32, place=Place(cpu), stop_gradient=True,
[0. , 2.19999981, 0.40000001, 0. , 0.50000000, 0.50000000])
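The counting rule, including the effect of weights and minlength, can be written out in a few lines of plain Python. This is a hypothetical reference sketch; it assumes, as the parameter description suggests, that the output has at least minlength bins and otherwise max(x) + 1 bins.

```python
# Plain-Python sketch of bincount on a 1-D list of non-negative ints (hypothetical helper).

def bincount(values, weights=None, minlength=0):
    """Count occurrences of each value, or sum the matching weights."""
    size = max(max(values, default=-1) + 1, minlength)
    bins = [0] * size if weights is None else [0.0] * size
    for i, v in enumerate(values):
        bins[v] += 1 if weights is None else weights[i]
    return bins


x = [1, 2, 1, 4, 5]
counts = bincount(x)
weighted = bincount(x, weights=[2.1, 0.4, 0.1, 0.5, 0.5])
padded = bincount(x, minlength=8)
```

Bin 1 of the weighted result is 2.1 + 0.1, the sum of the weights at the two positions where x equals 1.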

-

bitwise_and

(

y: Tensor,

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_and¶

-

Apply bitwise_and on Tensor X and Y.

\[Out = X \& Y\]

Note

paddle.bitwise_and supports broadcasting. If you want to know more about broadcasting, please refer to Introduction to Tensor.

- Parameters

-

x (Tensor) – Input Tensor of bitwise_and. It is an N-D Tensor of bool, uint8, int8, int16, int32, int64.

y (Tensor) – Input Tensor of bitwise_and. It is an N-D Tensor of bool, uint8, int8, int16, int32, int64.

out (Tensor|None, optional) – Result of bitwise_and. It is an N-D Tensor with the same data type as the input Tensor. Default: None.

name (str|None, optional) – The default value is None. Normally there is no need for user to set this property. For more information, please refer to api_guide_Name.

- Returns

-

Result of bitwise_and. It is an N-D Tensor with the same data type as the input Tensor.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([-5, -1, 1])
>>> y = paddle.to_tensor([4, 2, -3])
>>> res = paddle.bitwise_and(x, y)
>>> print(res)
Tensor(shape=[3], dtype=int64, place=Place(cpu), stop_gradient=True,
[0, 2, 1])
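The results for negative operands are easier to follow in two's-complement form. The sketch below uses Python's built-in integer `&`, which behaves as if integers had an infinite two's-complement representation, and prints the low 8 bits for readability; `to_bits` is a hypothetical helper.

```python
# Plain-Python sketch: bitwise AND on signed integers, viewed as 8-bit two's complement.

def to_bits(value, width=8):
    """Low `width` bits of `value` as a binary string (two's complement for negatives)."""
    return format(value & ((1 << width) - 1), f"0{width}b")


x = [-5, -1, 1]
y = [4, 2, -3]

result = [a & b for a, b in zip(x, y)]
bits = [(to_bits(a), to_bits(b), to_bits(a & b)) for a, b in zip(x, y)]
```

For the first pair, -5 is 11111011 and 4 is 00000100; they share no set bit, so the result is 0.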

-

bitwise_and_

(

y: Tensor,

name: str | None = None

)

Tensor

[source]

bitwise_and_¶

-

In-place version of the bitwise_and API: the result overwrites the input x. Please refer to bitwise_and.

-

bitwise_invert

(

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_invert¶

-

Apply bitwise_not (bitwise inversion) on Tensor x.

This is an alias to the paddle.bitwise_not function.

\[Out = \sim X\]

Note

paddle.bitwise_invert is functionally equivalent to paddle.bitwise_not.

- Parameters

-

x (Tensor) – Input Tensor of bitwise_invert. It is an N-D Tensor of bool, uint8, int8, int16, int32, int64.

out (Tensor|None, optional) – Result of bitwise_invert. It is an N-D Tensor with the same data type as the input Tensor. Default: None.

name (str|None, optional) – The default value is None. This property is typically not set by the user. For more information, please refer to api_guide_Name.

- Returns

-

Result of bitwise_invert. It is an N-D Tensor with the same data type as the input Tensor.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([-5, -1, 1])
>>> res = x.bitwise_invert()
>>> print(res)
Tensor(shape=[3], dtype=int64, place=Place(cpu), stop_gradient=True,
[ 4, 0, -2])

-

bitwise_invert_

(

name: str | None = None

)

Tensor

[source]

bitwise_invert_¶

-

In-place version of the bitwise_invert API: the result overwrites the input x. Please refer to bitwise_invert.

-

bitwise_left_shift

(

y: Tensor,

is_arithmetic: bool = True,

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_left_shift¶

-

Apply bitwise_left_shift on Tensor X and Y.

\[Out = X \ll Y\]

Note

paddle.bitwise_left_shift supports broadcasting. If you want to know more about broadcasting, please refer to Introduction to Tensor.

- Parameters

-

x (Tensor) – Input Tensor of bitwise_left_shift. It is an N-D Tensor of uint8, int8, int16, int32, int64.

y (Tensor) – Input Tensor of bitwise_left_shift. It is an N-D Tensor of uint8, int8, int16, int32, int64.

is_arithmetic (bool, optional) – Whether to use an arithmetic shift; if False, a logical shift is used. Default: True.

out (Tensor|None, optional) – Result of bitwise_left_shift. It is an N-D Tensor with the same data type as the input Tensor. Default: None.

name (str|None, optional) – The default value is None. Normally there is no need for user to set this property. For more information, please refer to api_guide_Name.

- Returns

-

Result of bitwise_left_shift. It is an N-D Tensor with the same data type as the input Tensor.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([[1,2,4,8],[16,17,32,65]])
>>> y = paddle.to_tensor([[1,2,3,4,], [2,3,2,1]])
>>> paddle.bitwise_left_shift(x, y, is_arithmetic=True)
Tensor(shape=[2, 4], dtype=int64, place=Place(gpu:0), stop_gradient=True,
[[2  , 8  , 32 , 128],
 [64 , 136, 128, 130]])

>>> import paddle
>>> x = paddle.to_tensor([[1,2,4,8],[16,17,32,65]])
>>> y = paddle.to_tensor([[1,2,3,4,], [2,3,2,1]])
>>> paddle.bitwise_left_shift(x, y, is_arithmetic=False)
Tensor(shape=[2, 4], dtype=int64, place=Place(gpu:0), stop_gradient=True,
[[2  , 8  , 32 , 128],
 [64 , 136, 128, 130]])

-

bitwise_left_shift_

(

y: Tensor,

is_arithmetic: bool = True,

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_left_shift_¶

-

In-place version of the bitwise_left_shift API: the result overwrites the input x. Please refer to bitwise_left_shift.

-

bitwise_not

(

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_not¶

-

Apply bitwise_not on Tensor X.

\[Out = \sim X\]

Note

paddle.bitwise_not supports broadcasting. If you want to know more about broadcasting, please refer to Introduction to Tensor.

- Parameters

-

x (Tensor) – Input Tensor of bitwise_not. It is an N-D Tensor of bool, uint8, int8, int16, int32, int64.

out (Tensor|None, optional) – Result of bitwise_not. It is an N-D Tensor with the same data type as the input Tensor. Default: None.

name (str|None, optional) – The default value is None. Normally there is no need for user to set this property. For more information, please refer to api_guide_Name.

- Returns

-

Result of bitwise_not. It is an N-D Tensor with the same data type as the input Tensor.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([-5, -1, 1])
>>> res = paddle.bitwise_not(x)
>>> print(res)
Tensor(shape=[3], dtype=int64, place=Place(cpu), stop_gradient=True,
[ 4, 0, -2])

-

bitwise_not_

(

name: str | None = None

)

Tensor

[source]

bitwise_not_¶

-

In-place version of the bitwise_not API: the result overwrites the input x. Please refer to bitwise_not.

-

bitwise_or

(

y: Tensor,

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_or¶

-

Apply bitwise_or on Tensor X and Y.

\[Out = X | Y\]

Note

paddle.bitwise_or supports broadcasting. If you want to know more about broadcasting, please refer to Introduction to Tensor.

Note

Alias Support: The parameter name input can be used as an alias for x, and other can be used as an alias for y. For example, bitwise_or(input=tensor_x, other=tensor_y, ...) is equivalent to bitwise_or(x=tensor_x, y=tensor_y, ...).

- Parameters

-

x (Tensor) – Input Tensor of bitwise_or. It is an N-D Tensor of bool, uint8, int8, int16, int32, int64. alias: input.

y (Tensor) – Input Tensor of bitwise_or. It is an N-D Tensor of bool, uint8, int8, int16, int32, int64. alias: other.

out (Tensor|None, optional) – Result of bitwise_or. It is an N-D Tensor with the same data type as the input Tensor. Default: None.

name (str|None, optional) – The default value is None. Normally there is no need for user to set this property. For more information, please refer to api_guide_Name.

- Returns

-

Result of bitwise_or. It is an N-D Tensor with the same data type as the input Tensor.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([-5, -1, 1])
>>> y = paddle.to_tensor([4, 2, -3])
>>> res = paddle.bitwise_or(x, y)
>>> print(res)
Tensor(shape=[3], dtype=int64, place=Place(cpu), stop_gradient=True,
[-1, -1, -3])

-

bitwise_or_

(

y: Tensor,

name: str | None = None

)

Tensor

[source]

bitwise_or_¶

-

In-place version of the bitwise_or API: the result overwrites the input x. Please refer to bitwise_or.

-

bitwise_right_shift

(

y: Tensor,

is_arithmetic: bool = True,

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_right_shift¶

-

Apply bitwise_right_shift on Tensor X and Y.

\[Out = X \gg Y\]

Note

paddle.bitwise_right_shift supports broadcasting. If you want to know more about broadcasting, please refer to Introduction to Tensor.

- Parameters

-

x (Tensor) – Input Tensor of bitwise_right_shift. It is an N-D Tensor of uint8, int8, int16, int32, int64.

y (Tensor) – Input Tensor of bitwise_right_shift. It is an N-D Tensor of uint8, int8, int16, int32, int64.

is_arithmetic (bool, optional) – Whether to use an arithmetic shift; if False, a logical shift is used. Default: True.

out (Tensor|None, optional) – Result of bitwise_right_shift. It is an N-D Tensor with the same data type as the input Tensor. Default: None.

name (str|None, optional) – The default value is None. Normally there is no need for user to set this property. For more information, please refer to api_guide_Name.

- Returns

-

Result of bitwise_right_shift. It is an N-D Tensor with the same data type as the input Tensor.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([[10,20,40,80],[16,17,32,65]])
>>> y = paddle.to_tensor([[1,2,3,4,], [2,3,2,1]])
>>> paddle.bitwise_right_shift(x, y, is_arithmetic=True)
Tensor(shape=[2, 4], dtype=int64, place=Place(gpu:0), stop_gradient=True,
[[5 , 5 , 5 , 5 ],
 [4 , 2 , 8 , 32]])

>>> import paddle
>>> x = paddle.to_tensor([[-10,-20,-40,-80],[-16,-17,-32,-65]], dtype=paddle.int8)
>>> y = paddle.to_tensor([[1,2,3,4,], [2,3,2,1]], dtype=paddle.int8)
>>> paddle.bitwise_right_shift(x, y, is_arithmetic=False) # logic shift
Tensor(shape=[2, 4], dtype=int8, place=Place(gpu:0), stop_gradient=True,
[[123, 59 , 27 , 11 ],
 [60 , 29 , 56 , 95 ]])
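The difference between the arithmetic and the logical shift only shows up for negative inputs. The following pure-Python sketch imitates both modes for int8 values; it is an illustration of the semantics, not Paddle's implementation, and the function name `right_shift_int8` is hypothetical. It assumes a shift amount in the range 0 to 7.

```python
def right_shift_int8(x: int, y: int, is_arithmetic: bool = True) -> int:
    """Shift an int8 value right by y bits (assumes 0 <= y < 8)."""
    if is_arithmetic:
        # Python's >> on ints is already an arithmetic (sign-preserving) shift.
        return x >> y
    # Logical shift: reinterpret the 8-bit pattern as unsigned, shift in zeros,
    # then reinterpret the result as a signed int8 again.
    shifted = (x & 0xFF) >> y
    return shifted - 256 if shifted >= 128 else shifted


xs = [-10, -20, -40, -80]
ys = [1, 2, 3, 4]

arithmetic = [right_shift_int8(a, b, True) for a, b in zip(xs, ys)]
logical = [right_shift_int8(a, b, False) for a, b in zip(xs, ys)]

print(arithmetic)  # [-5, -5, -5, -5]
print(logical)     # [123, 59, 27, 11]
```

The logical results match the first row of the int8 example above: the sign bit is treated as an ordinary data bit, so negative inputs become positive once zeros are shifted in from the left.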

-

bitwise_right_shift_

(

y: Tensor,

is_arithmetic: bool = True,

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_right_shift_¶

-

Inplace version of the bitwise_right_shift API; the output Tensor is stored in place in the input x. Please refer to bitwise_right_shift.

-

bitwise_xor

(

y: Tensor,

out: Tensor | None = None,

name: str | None = None

)

Tensor

[source]

bitwise_xor¶

-

Apply bitwise_xor on Tensor X and Y.

\[Out = X \oplus Y\]

Note

paddle.bitwise_xor supports broadcasting. If you want to know more about broadcasting, please refer to Introduction to Tensor.

- Parameters

-

x (Tensor) – Input Tensor of bitwise_xor. It is an N-D Tensor of bool, uint8, int8, int16, int32, int64.

y (Tensor) – Input Tensor of bitwise_xor. It is an N-D Tensor of bool, uint8, int8, int16, int32, int64.

out (Tensor|None, optional) – Result of bitwise_xor. It is an N-D Tensor with the same data type as the input Tensor. Default: None.

name (str|None, optional) – The default value is None. Normally there is no need for the user to set this property. For more information, please refer to api_guide_Name.

- Returns

-

Result of bitwise_xor. It is an N-D Tensor with the same data type as the input Tensor.

- Return type

-

Tensor

Examples

>>> import paddle
>>> x = paddle.to_tensor([-5, -1, 1])
>>> y = paddle.to_tensor([4, 2, -3])
>>> res = paddle.bitwise_xor(x, y)
>>> print(res)
Tensor(shape=[3], dtype=int64, place=Place(cpu), stop_gradient=True,
[-1, -3, -4])
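A useful property of XOR is that it is its own inverse: applying the same second operand again recovers the first. The short sketch below checks this with Python's built-in `^` on plain ints, using the operands from the example; it does not call Paddle and is only meant to illustrate the arithmetic.

```python
x = [-5, -1, 1]
y = [4, 2, -3]

# Elementwise XOR, mirroring the operands of the example above.
xor = [a ^ b for a, b in zip(x, y)]
print(xor)  # [-1, -3, -4]

# XOR-ing the result with y again yields the original x.
restored = [r ^ b for r, b in zip(xor, y)]
print(restored)  # [-5, -1, 1]
```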

-

bitwise_xor_

(

y: Tensor,

name: str | None = None

)

Tensor

[source]

bitwise_xor_¶

-

Inplace version of the bitwise_xor API; the output Tensor is stored in place in the input x. Please refer to bitwise_xor.

-

block_diag

(

name: str | None = None

)

Tensor

[source]

block_diag¶

-

Create a block diagonal matrix from provided tensors.

- Parameters

-

inputs (list|tuple) – inputs is a Tensor list or Tensor tuple, one or more tensors with 0, 1, or 2 dimensions. The data type: bool, float16, float32, float64, uint8, int8, int16, int32, int64, bfloat16, complex64, complex128.

name (str|None, optional) – Name for the operation (optional, default is None).

- Returns

-

Tensor. The data type is the same as that of inputs.

Examples

>>> import paddle
>>> A = paddle.to_tensor([[4], [3], [2]])
>>> B = paddle.to_tensor([7, 6, 5])
>>> C = paddle.to_tensor(1)
>>> D = paddle.to_tensor([[5, 4, 3], [2, 1, 0]])
>>> E = paddle.to_tensor([[8, 7], [7, 8]])
>>> out = paddle.block_diag([A, B, C, D, E])
>>> print(out)
Tensor(shape=[9, 10], dtype=int64, place=Place(gpu:0), stop_gradient=True,
[[4, 0, 0, 0, 0, 0, 0, 0, 0, 0],
 [3, 0, 0, 0, 0, 0, 0, 0, 0, 0],
 [2, 0, 0, 0, 0, 0, 0, 0, 0, 0],
 [0, 7, 6, 5, 0, 0, 0, 0, 0, 0],
 [0, 0, 0, 0, 1, 0, 0, 0, 0, 0],
 [0, 0, 0, 0, 0, 5, 4, 3, 0, 0],
 [0, 0, 0, 0, 0, 2, 1, 0, 0, 0],
 [0, 0, 0, 0, 0, 0, 0, 0, 8, 7],
 [0, 0, 0, 0, 0, 0, 0, 0, 7, 8]])
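To make the layout rule explicit, here is a minimal pure-Python sketch of how blocks end up on the diagonal. It works on nested lists rather than Tensors, and the helper name `block_diag_lists` is hypothetical. Judging from the example output above, a 1-D input behaves like a single row and a 0-D input like a 1 x 1 block, so the sketch expects the caller to pass them in that 2-D form.

```python
def block_diag_lists(blocks):
    """Place 2-D blocks (lists of rows) along the diagonal of a zero matrix."""
    total_cols = sum(len(b[0]) for b in blocks)
    out, col = [], 0
    for b in blocks:
        width = len(b[0])
        for row in b:
            # Zeros to the left, the block row itself, zeros to the right.
            out.append([0] * col + list(row) + [0] * (total_cols - col - width))
        col += width  # the next block starts where this one ends
    return out


A = [[4], [3], [2]]  # 3 x 1
B = [[7, 6, 5]]      # 1-D input written as a 1 x 3 row
C = [[1]]            # 0-D input written as a 1 x 1 block

out = block_diag_lists([A, B, C])
for row in out:
    print(row)
```

The printed rows reproduce the top-left 5 x 5 corner of the Paddle output shown above.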

-

bmm

(

y: Tensor,

name: str | None = None,

*,

out: Tensor | None = None

)

Tensor

[source]

bmm¶

-

Applies batched matrix multiplication to two tensors.

Both of the two input tensors must be three-dimensional and share the same batch size.

If x is a (b, m, k) tensor, y is a (b, k, n) tensor, the output will be a (b, m, n) tensor.

- Parameters

-

x (Tensor) – The input Tensor.

y (Tensor) – The input Tensor.

name (str|None, optional) – A name for this layer. If set to None, the layer will be named automatically. Default: None.

out (Tensor, optional) – The output tensor.

- Returns

-

The product Tensor.

- Return type

-

Tensor

Examples

>>> import paddle
>>> # In imperative mode:
>>> # size x: (2, 2, 3) and y: (2, 3, 2)
>>> x = paddle.to_tensor([[[1.0, 1.0, 1.0],
...                     [2.0, 2.0, 2.0]],
...                     [[3.0, 3.0, 3.0],
...                     [4.0, 4.0, 4.0]]])
>>> y = paddle.to_tensor([[[1.0, 1.0],[2.0, 2.0],[3.0, 3.0]],
...                     [[4.0, 4.0],[5.0, 5.0],[6.0, 6.0]]])
>>> out = paddle.bmm(x, y)
>>> print(out)
Tensor(shape=[2, 2, 2], dtype=float32, place=Place(cpu), stop_gradient=True,
[[[6. , 6. ],
  [12., 12.]],
 [[45., 45.],
  [60., 60.]]])
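The shape rule (b, m, k) times (b, k, n) gives (b, m, n) can be spelled out with nested lists. The sketch below is a plain-Python reference for the arithmetic, not how Paddle computes it; `bmm_lists` is a name invented for this illustration.

```python
def bmm_lists(x, y):
    """Batched matmul on nested lists: (b, m, k) @ (b, k, n) -> (b, m, n)."""
    if len(x) != len(y):
        raise ValueError("batch sizes differ")
    out = []
    for xb, yb in zip(x, y):
        if len(xb[0]) != len(yb):
            raise ValueError("inner dimensions differ")
        cols = list(zip(*yb))  # columns of the (k, n) matrix
        out.append([[sum(a * b for a, b in zip(row, col)) for col in cols]
                    for row in xb])
    return out


x = [[[1.0, 1.0, 1.0], [2.0, 2.0, 2.0]],
     [[3.0, 3.0, 3.0], [4.0, 4.0, 4.0]]]
y = [[[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]],
     [[4.0, 4.0], [5.0, 5.0], [6.0, 6.0]]]

out = bmm_lists(x, y)
print(out)  # [[[6.0, 6.0], [12.0, 12.0]], [[45.0, 45.0], [60.0, 60.0]]]
```

Each batch entry is multiplied independently, which is why both inputs must share the same batch size.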

-

bool

(

)

Tensor

[source]

bool¶

-

Cast a Tensor to bool data type if it differs from the current dtype; otherwise, return the original Tensor.

- Returns

-

A new Tensor with bool dtype.

- Return type

-

Tensor

-

broadcast_shape

(

y_shape: Sequence[int]

)

list[int]

[source]

broadcast_shape¶

-

Returns the shape that results from broadcasting tensors of shapes x_shape and y_shape against each other.

Note

If you want to know more about broadcasting, please refer to Introduction to Tensor.

- Parameters

-

x_shape (list[int]|tuple[int]) – A shape of tensor.

y_shape (list[int]|tuple[int]) – A shape of tensor.

- Returns

-

list[int], the result shape.

Examples

>>> import paddle
>>> shape = paddle.broadcast_shape([2, 1, 3], [1, 3, 1])
>>> shape
[2, 3, 3]
>>> # shape = paddle.broadcast_shape([2, 1, 3], [3, 3, 1])
>>> # ValueError (terminated with error message).
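The rule behind both the successful call and the ValueError case can be sketched in a few lines of plain Python. This is an illustration of the usual broadcasting rule under the assumption that shapes are aligned from the last dimension; the name `broadcast_shape_sketch` is hypothetical and this is not Paddle's source.

```python
def broadcast_shape_sketch(x_shape, y_shape):
    """Return the broadcast of two shapes, aligning from the last dimension."""
    result = []
    # Walk both shapes right to left; a missing dimension counts as size 1.
    for i in range(1, max(len(x_shape), len(y_shape)) + 1):
        a = x_shape[-i] if i <= len(x_shape) else 1
        b = y_shape[-i] if i <= len(y_shape) else 1
        if a != b and a != 1 and b != 1:
            raise ValueError(
                f"cannot broadcast {list(x_shape)} with {list(y_shape)}")
        # A size of 1 stretches to match the other operand.
        result.append(b if a == 1 else a)
    return result[::-1]


print(broadcast_shape_sketch([2, 1, 3], [1, 3, 1]))  # [2, 3, 3]

try:
    broadcast_shape_sketch([2, 1, 3], [3, 3, 1])
except ValueError as err:
    print("ValueError:", err)
```

The second call fails in the leading dimension, where the sizes 2 and 3 differ and neither is 1.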

-

broadcast_shapes

(

)

list[int]

[source]

broadcast_shapes¶

-

Returns the shape that results from broadcasting tensors with the given shapes against each other.

Note

If you want to know more about broadcasting, please refer to Introduction to Tensor.

- Parameters

-

*shapes (list[int]|tuple[int]) – A shape list of multiple tensors.

- Returns

-

list[int], the result shape.

Examples

>>> import paddle
>>> shape = paddle.broadcast_shapes([2, 1, 3], [1, 3, 1])
>>> shape
[2, 3, 3]
>>> # shape = paddle.broadcast_shapes([2, 1, 3], [3, 3, 1])
>>> # ValueError (terminated with error message).
>>> shape = paddle.broadcast_shapes([5, 1, 3], [1, 4, 1], [1, 1, 3])
>>> shape
[5, 4, 3]
>>> # shape = paddle.broadcast_shapes([5, 1, 3], [1, 4, 1], [1, 2, 3])
>>> # ValueError (terminated with error message).
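With more than two shapes, the same check can be done one dimension at a time across all inputs. The sketch below is one possible way to express that in plain Python, assuming the standard broadcasting rule; `broadcast_shapes_sketch` is an invented name and the code is not taken from Paddle.

```python
from itertools import zip_longest


def broadcast_shapes_sketch(*shapes):
    """Broadcast any number of shapes, aligning from the last dimension."""
    result = []
    # Reversing each shape lets zip_longest align trailing dimensions;
    # missing leading dimensions are filled with 1.
    for sizes in zip_longest(*(reversed(s) for s in shapes), fillvalue=1):
        non_one = {s for s in sizes if s != 1}
        if len(non_one) > 1:
            raise ValueError(f"incompatible sizes {sizes}")
        result.append(non_one.pop() if non_one else 1)
    return result[::-1]


print(broadcast_shapes_sketch([5, 1, 3], [1, 4, 1], [1, 1, 3]))  # [5, 4, 3]

try:
    broadcast_shapes_sketch([5, 1, 3], [1, 4, 1], [1, 2, 3])
except ValueError as err:
    print("ValueError:", err)
```

In the failing call the middle dimension holds the sizes 1, 4 and 2, and two different sizes other than 1 cannot be reconciled.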

-

broadcast_tensors

(

name: str | None = None

)

list[Tensor]

[source]

broadcast_tensors¶

-

Broadcast a list of tensors following broadcast semantics.

Note

If you want to know more about broadcasting, please refer to Introduction to Tensor.

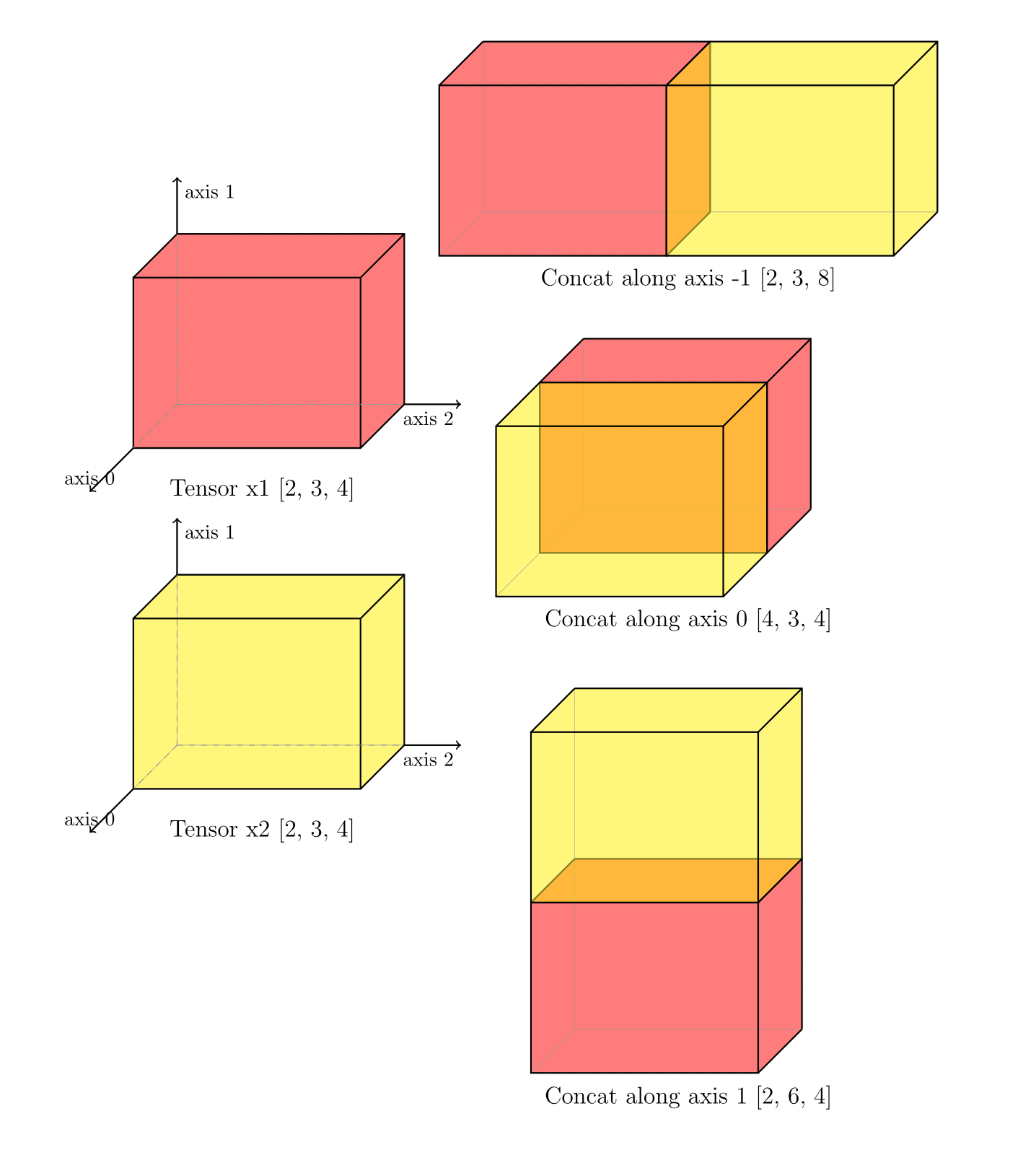

The figure illustrates the process of broadcasting three tensors to the same shape. The shapes of the three tensors are [4, 1, 3], [2, 3], and [4, 2, 1], respectively. During broadcasting, alignment starts from the last dimension, and for each dimension, either the sizes of the tensors in that dimension are equal, or one of the tensors has a size of 1, or one of the tensors lacks that dimension. In the last dimension, Tensor3 has a size of 1, while Tensor1 and Tensor2 have sizes of 3; thus, this dimension is expanded to 3 for all tensors. In the second-to-last dimension, Tensor1 has a size of 1, and Tensor2 and Tensor3 both have sizes of 2; hence, this dimension is expanded to 2 for all tensors. In the third-to-last dimension, Tensor2 lacks this dimension, while Tensor1 and Tensor3 have sizes of 4; consequently, this dimension is expanded to 4 for all tensors. Ultimately, all tensors are expanded to [4, 2, 3].

- Parameters

-

input (list|tuple) – input is a Tensor list or Tensor tuple with data type bool, float16, float32, float64, int32, int64, complex64, complex128. All the Tensors in input must have the same data type. Currently only tensors with rank no greater than 5 are supported.

name (str|None, optional) – Name for the operation (optional, default is None). For more information, please refer to api_guide_Name.

- Returns

-

list(Tensor), The list of broadcasted tensors following the same order as

input.

Examples

>>> import paddle
>>> x1 = paddle.rand([1, 2, 3, 4]).astype('float32')
>>> x2 = paddle.rand([1, 2, 1, 4]).astype('float32')
>>> x3 = paddle.rand([1, 1, 3, 1]).astype('float32')
>>> out1, out2, out3 = paddle.broadcast_tensors(input=[x1, x2, x3])
>>> # out1, out2, out3: tensors broadcasted from x1, x2, x3 with shape [1,2,3,4]
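To see what the expansion does to the data itself, the following pure-Python sketch expands nested lists with the shapes from the walk-through, [4, 1, 3], [2, 3] and [4, 2, 1], to the common shape [4, 2, 3]. The helpers `shape_of` and `expand` are hypothetical names for this illustration, the target shape is passed in by hand and assumed to be a valid broadcast shape, and none of this reflects how Paddle implements the operation.

```python
def shape_of(t):
    """Return the shape of a rectangular nested list."""
    shape = []
    while isinstance(t, list):
        shape.append(len(t))
        t = t[0]
    return shape


def expand(t, target):
    """Expand nested list t to the (already valid) broadcast shape target."""
    # Add missing leading dimensions, each of size 1.
    for _ in range(len(target) - len(shape_of(t))):
        t = [t]

    def rec(node, dims):
        if not dims:
            return node  # reached a scalar
        # A size-1 dimension is repeated to match the target size.
        items = node if len(node) == dims[0] else node * dims[0]
        return [rec(item, dims[1:]) for item in items]

    return rec(t, target)


# Shapes from the walk-through: [4, 1, 3], [2, 3] and [4, 2, 1].
t1 = [[[10 * i + k for k in range(3)]] for i in range(4)]
t2 = [[3 * j + k for k in range(3)] for j in range(2)]
t3 = [[[i + j] for j in range(2)] for i in range(4)]

outs = [expand(t, [4, 2, 3]) for t in (t1, t2, t3)]
print([shape_of(o) for o in outs])  # [[4, 2, 3], [4, 2, 3], [4, 2, 3]]
```

Values are only repeated, never recomputed: every slice of the expanded second list along the new leading dimension equals the original [2, 3] input.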

-

broadcast_to

(

shape: ShapeLike,

name: str | None = None

)

Tensor